When ChatGPT launched in late 2022, it started to change the landscape of AI and how applications interact with us. Apps no longer have to be rigid tools that only follow predefined rules. Instead, the technology behind ChatGPT lets them respond to users in a more intelligent, dynamic, and even empathetic way, and it has inspired a wave of ChatGPT-like applications. This article explains how to build an application like ChatGPT that can give your business an edge across domains, from customer service to financial analytics.

What is a “ChatGPT-like” Application?

Informally, a ChatGPT-like application is a software product that can do what ChatGPT excels at. That involves understanding questions or requests written in natural language and using what the underlying model learned during pretraining to generate relevant, context-aware answers.

In other words, it aims to mimic human-like conversations to perform specific tasks (e.g., brainstorming essay ideas, summarizing meeting notes, or creating an image).

Technically, a ChatGPT-like application uses the power of large language models (LLMs) to provide an interactive, conversational experience to users.

These LLMs are already trained on massive datasets. They work by predicting the next most likely token (a word or word fragment) given everything that came before it, which lets them capture context and create new, suitable content for your requests.
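To make next-word prediction more concrete, here is a toy sketch. The candidate words and their scores are invented for illustration; a real LLM computes such scores with a neural network over a vocabulary of many thousands of tokens.

```python
import math

def softmax(scores):
    """Turn raw scores (logits) into probabilities that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def most_likely_next(candidates, scores):
    """Return the candidate with the highest probability, plus that probability."""
    probs = softmax(scores)
    best = max(range(len(candidates)), key=lambda i: probs[i])
    return candidates[best], probs[best]

# Hypothetical scores a model might assign after "The weather today is"
candidates = ["sunny", "purple", "running"]
scores = [3.2, 0.1, -1.0]

token, prob = most_likely_next(candidates, scores)
print(token, round(prob, 2))
```

In practice, models often sample from the probabilities instead of always taking the top candidate, which is why the same prompt can produce different answers.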

LLM-based, or ChatGPT-like, applications inherit that capability. They appear in different forms, from web-based chatbots for customer service and voice assistants to “smart” features embedded into existing software.

Some of these apps, such as Google Gemini or Microsoft Copilot, serve general purposes. Others are designed for specialized tasks, like Salesforce’s Agentforce for customer service and marketing automation or GitHub Copilot for coding assistance.

Understanding the Core of ChatGPT-Like Applications

Behind the user-friendly interface of a ChatGPT-like application is a complex system of components working together. At its core is a large language model (LLM), which relies on the following technologies and components:

1. Natural Language Processing (NLP)

NLP helps ChatGPT-like apps “read” and understand our everyday language. It splits input text into smaller units, identifies grammar and relationships between words (“meaning”), and transforms words into formats the LLM can handle. With NLP, the model can interpret intent, sentiment, and even subtle cues in a conversation (e.g., tone or sarcasm expressed in the wording).
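As a rough illustration of splitting text and turning it into numbers, the sketch below uses a simple word-level tokenizer. This is a simplification we chose for readability: production LLMs typically use subword tokenizers, and the function names here are our own.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into words and punctuation marks."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(tokens):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

def encode(text, vocab, unknown_id=-1):
    """Map text to token IDs; tokens missing from the vocabulary get unknown_id."""
    return [vocab.get(tok, unknown_id) for tok in tokenize(text)]

tokens = tokenize("Where is my order? My order is late!")
vocab = build_vocab(tokens)
ids = encode("Is my order late?", vocab)
print(tokens)
print(ids)
```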

2. Machine Learning (ML)

Machine learning is how the model gets better at answering. How? During training, it learns patterns, word relationships, and contextual clues from data, so it can guess the next word or phrase in alignment with your intent. This lets it generate more coherent, relevant responses.

3. Deep Learning (DL) and Transformer Architecture

Almost all modern LLMs are built on the transformer architecture – a deep neural network. This architecture incorporates attention mechanisms that actively assess which words matter most, given the flow of conversation. Combined with advanced deep learning techniques like causal language modeling, RLHF, or MoE, the system handles more demanding tasks without missing a beat. The upshot? You get responses that maintain context, accuracy, and consistency throughout extended client interactions.
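The attention idea can be sketched in a few lines. Below is a minimal, pure-Python version of scaled dot-product attention for a single query vector. The two-dimensional vectors are made-up values; real transformers use learned, high-dimensional vectors and run many attention heads in parallel.

```python
import math

def softmax(xs):
    """Normalize scores into weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    d = len(query)
    # Similarity between the query and every key, scaled by sqrt(dimension)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output is the weighted average of the value vectors
    output = [
        sum(w * v[i] for w, v in zip(weights, values))
        for i in range(len(values[0]))
    ]
    return weights, output

# Toy vectors standing in for three earlier words in the conversation
query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]

weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights])
```

Words whose keys align with the query receive larger weights, which is how the model decides what "matters most" at each step.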

4. Vast or Specialized Training Data

With the right data, you can train ChatGPT-like applications for just about anything – from casual conversation to domain-specific tasks. Feed them broad, general datasets and they’ll handle everyday inquiries. Zero in with specialized info, and they’ll become experts in your industry, whether that’s about handling delivery complaints or combing through legal archives for relevant cases. Bottom line: the quality and focus of the data you use really shape how well these applications can support everything, from friendly chat to detailed, technical requests.

5. Supporting Infrastructure

Beyond the fundamental components, a complete ChatGPT-like application also requires robust infrastructure with powerful features. Some typical components include:

- APIs to connect the model with other external systems, like your company’s existing software.

- Data security measures to protect data privacy.

- Scalable cloud services to process many concurrent conversations or powerful hardware (like GPUs or specialized AI accelerators) to support inference or real-time data processing.

How to Create an Application Like ChatGPT

You’ve understood what ChatGPT-like applications are and what core components they have. We will now detail how you can create such an application. Follow this step-by-step guide and make adjustments to fit your unique demands:

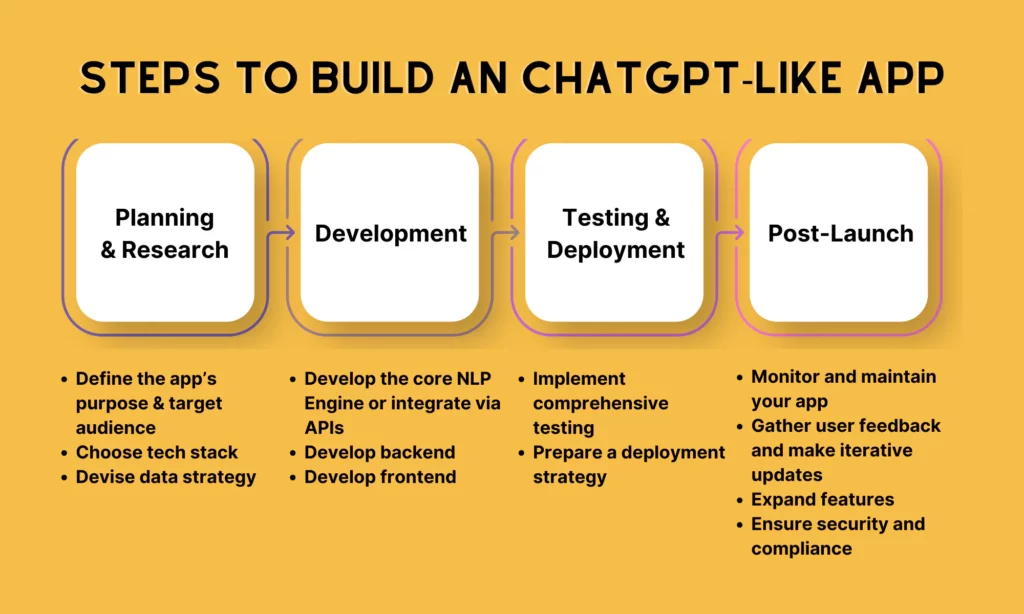

Phase 1: Planning and Research

The first and most important stage is building a solid, thorough plan for your potential app. This phase will lay the foundation for every business and technical decision to follow:

Define the Application’s Purpose and Target Audience

Start by identifying why your business needs the app and who the main audience is.

The main goal of the app usually maps to a problem your company is facing. Typical problem areas include customer support, marketing automation, financial management, personal productivity, and learning support.

Besides the primary goal, you need to clarify in detail who will use the app. This may involve their age range, locations, technical skills, or specific problems they are encountering. For example, you may want to introduce an AI math tutor that helps Grade 6 and Grade 9 students in Alberta prepare for PATs (Provincial Achievement Tests).

Then, you can map out user journeys to understand how the audience will interact with the app. This helps you draw up a curated list of essential features and select the right model size.

Technology Stack Selection

To design the technical aspects of the app, you need to choose the right technologies for your stack. This stack often covers the following main areas:

Natural Language Processing (NLP) Models

- Large Language Models (LLMs): As the backbone of ChatGPT-like applications, LLMs are a crucial component you have to consider first. Choose between:

- Open-source solutions like Llama or Falcon are a good fit if you want to run your own infrastructure. They give you full oversight and can cut costs over time, especially at high usage volumes.

- API-driven solutions like OpenAI’s GPT or Google’s Gemini let your developers focus on refining your app’s features and customer value, not staying up late patching backend models. But you may trade away some flexibility.

- Other NLP capabilities: You’ll likely bring in some NLP functions to support LLMs, like tokenization, sentiment analysis, or named entity recognition.

Backend Infrastructure: On the backend, your tech stack matters—a lot. Here’s what you need to focus on:

- Programming languages: Python is the industry standard for AI and machine learning, thanks to its robust libraries and an active developer community. If real-time interaction is a must, Node.js is a common choice because of its event-driven, non-blocking I/O model.

- Frameworks: Django and Flask are leading Python frameworks when you need a reliable API and data management. Pick the tools that match your business goals and technical requirements.

- Cloud services: Most organizations anchor their deployments on established cloud services (e.g., AWS, Google Cloud, or Azure) to enable scalability and handle GPU requirements.

Frontend Interface: Creating the client-side of the app needs:

- Web technologies: Some popular technologies like React, Vue.js, or Angular enable responsive browser experiences.

- Mobile development: You can consider React Native for cross-platform apps or Swift (iOS) and Kotlin (Android) for native apps.

Data Strategy

Data is an integral component in any ChatGPT-like application. Therefore, you should build a thorough data plan to ensure that the model will perform reliably and accurately. Accordingly, your data strategy involves:

- Data collection and cleaning: The data plan should clarify how relevant datasets will be collected for fine-tuning. Also, consider using suitable tools and methods to eliminate errors, inconsistencies, duplicates, and even sensitive information. Clean, well-processed data is what allows the model to produce relevant, reliable responses.

- Ethical considerations and data privacy: Ethics and data security are among the biggest concerns in LLM-based applications, because these apps base their answers primarily on data. Not all data should be fed to the model, since some of it is sensitive or confidential. To make sure data is used responsibly, your app should obtain user consent before gathering it. Further, it needs to comply with regulations (e.g., GDPR or CCPA) to ensure secure storage and avoid legal penalties.
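A cleaning pass of the kind described above might look like the following sketch. The regular expressions are deliberately simple assumptions for illustration and will miss many real-world formats; production pipelines usually rely on dedicated tooling for detecting personal data.

```python
import re
import unicodedata

# Simplistic patterns, for illustration only
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def clean_record(text):
    """Normalize Unicode, mask emails and phone numbers, collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return re.sub(r"\s+", " ", text).strip()

def clean_dataset(records):
    """Clean every record, then drop empty entries and case-insensitive duplicates."""
    seen = set()
    cleaned = []
    for rec in records:
        text = clean_record(rec)
        key = text.lower()
        if text and key not in seen:
            seen.add(key)
            cleaned.append(text)
    return cleaned

raw = [
    "Contact me at jane@example.com  please",
    "contact me at JANE@example.com please",
    "Call +1 (555) 010-9999 now",
    "   ",
]
result = clean_dataset(raw)
print(result)
```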

Phase 2: Development and Implementation

After the first stage, you’ve created a clear vision, prepared the right technology stack, and developed a responsible data strategy for your app. Now, let’s turn your paper ideas into a working solution. This stage details how you can build the core language engine, the backend to power it, and the interface where users will interact with your app.

Core NLP Engine Development (or API Integration)

First, you need to decide whether to develop your own model or integrate a third-party API. The approach you choose determines most of the workload for this phase.

If you use an API, you should:

- Set up API keys: obtain credentials from the model provider, like OpenAI, Anthropic, or Google, then store these API keys securely in environment variables.

- Process requests and responses by doing the following things:

- Build a function to send user prompts to the API and receive generated text.

- Implement retry logic and error handling to manage rate limits or timeouts.

- Parse the API’s JSON response into a format your app can display.
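The steps above can be sketched without tying the code to any one provider. In this example, `send` is a placeholder for whatever function actually performs the HTTP call, and `CHAT_API_KEY`, `TransientError`, and the `{"reply": ...}` response shape are all hypothetical names we made up. Check your provider's documentation for its real client library, error types, and response format.

```python
import json
import os
import time

class TransientError(Exception):
    """Hypothetical error the transport raises for rate limits or timeouts."""

def get_api_key(env_var="CHAT_API_KEY"):
    """Read the key from an environment variable instead of hard-coding it."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return key

def ask_model(prompt, send, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Send a prompt, retrying transient failures with exponential backoff."""
    for attempt in range(max_attempts):
        try:
            raw = send(prompt)               # returns a JSON string
            return json.loads(raw)["reply"]  # assumed response shape
        except TransientError:
            if attempt == max_attempts - 1:
                raise                        # out of attempts: surface the error
            sleep(base_delay * 2 ** attempt) # wait 1s, 2s, 4s, ...

# Stand-in transport that fails twice, then succeeds
calls = {"n": 0}

def flaky_send(prompt):
    calls["n"] += 1
    if calls["n"] < 3:
        raise TransientError("rate limited")
    return json.dumps({"reply": f"Echo: {prompt}"})

delays = []  # record the waits instead of actually sleeping
answer = ask_model("Hello", flaky_send, sleep=delays.append)
print(answer, delays)
```

Passing `send` and `sleep` in as parameters keeps the retry logic easy to test without touching the network.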

If you fine-tune an existing model or build a language model from scratch, here are the steps you should focus on:

- Data preprocessing and tokenization:

- As we already said, data plays a very important role in any ChatGPT-like application. That’s why you should have appropriate preprocessing methods to clean and normalize your textual data. These methods often involve deduplicating data, fixing encoding, and removing sensitive information.

- Further, you can break the dataset down into tokens to make it compatible with your chosen model architecture.

- Model training or fine-tuning:

- You can start with a pre-trained transformer like Falcon or Llama and adapt it to domain-specific data.

- Using frameworks, such as PyTorch or TensorFlow, with distributed training helps process large datasets.

- You can monitor loss curves and use early stopping to prevent overfitting.

- Running the inference process:

- You need to create a service that loads the trained model, accepts user input, runs inference, and delivers the generated text back to the client in real-time.

- You can use techniques like model quantization or GPU acceleration for optimized latency.
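As one example from the training steps above, early stopping can be expressed as a small helper that watches the validation loss. This is a framework-independent sketch with made-up loss values; libraries built on PyTorch or TensorFlow typically ship their own equivalents, and the class name and parameters here are our own.

```python
class EarlyStopping:
    """Stop training when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def should_stop(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best = val_loss   # new best: reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1   # no improvement this epoch
        return self.bad_epochs >= self.patience

# Invented validation losses: improvement stalls after epoch 3
val_losses = [2.1, 1.7, 1.5, 1.52, 1.51, 1.55, 1.6]

stopper = EarlyStopping(patience=3)
stopped_at = None
for epoch, loss in enumerate(val_losses, start=1):
    if stopper.should_stop(loss):
        stopped_at = epoch
        break

print(stopped_at, stopper.best)
```

In a real training loop, you would also save a checkpoint whenever a new best loss is recorded, so that you can restore the best weights after stopping.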

Backend Development

Developing a robust server-side is important because it connects the NLP engine and the user interface. Here are several tasks you need to do for backend development:

- Build API endpoints for chat interactions: An endpoint is typically `POST /chat` where the frontend sends a message and receives a generated reply. When creating API endpoints, you should include parameters for user ID, session ID, and conversation history.

- Manage sessions: This often involves maintaining conversational context by keeping chat history in memory or databases. Further, you can leverage unique tokens to link each user with their ongoing conversation.

- Integrate the backend with the NLP model or API: This is done by calling your inference pipeline or the third-party API from inside the chat endpoint. Also, use asynchronous processing so that slow model calls don’t block other requests and response times stay low.

- Set up a database to store conversation history: If you want your ChatGPT-like app to have solid long-term memory, you’re going to need a robust database. Go for something professional, like PostgreSQL, MongoDB, or Redis. These databases are widely used to store user messages, timestamps, and bot responses efficiently.

- Set up security measures: Make data security a top priority. Implement encryption to safeguard information at rest, and use role-based access control (RBAC) to ensure only authorized personnel can access sensitive records. Basically, future-proof your system and keep your bases covered.
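To show how the endpoint, session handling, and storage fit together, here is a framework-agnostic sketch of the logic behind `POST /chat`. It uses Python's built-in `sqlite3` module as a stand-in for PostgreSQL or MongoDB, and `generate` is a placeholder for your model or API call. The table layout and function names are assumptions for illustration.

```python
import sqlite3
import time
import uuid

def open_store(path=":memory:"):
    """Create the message table if needed and return the connection."""
    db = sqlite3.connect(path)
    db.execute(
        "CREATE TABLE IF NOT EXISTS messages ("
        "user_id TEXT, session_id TEXT, role TEXT, content TEXT, created_at REAL)"
    )
    return db

def load_history(db, session_id):
    """Return the conversation so far, oldest message first."""
    rows = db.execute(
        "SELECT role, content FROM messages WHERE session_id = ? ORDER BY rowid",
        (session_id,),  # parameterized query guards against SQL injection
    )
    return [{"role": role, "content": content} for role, content in rows]

def handle_chat(db, payload, generate):
    """Load context, call the model, then persist both turns."""
    session_id = payload.get("session_id") or uuid.uuid4().hex
    history = load_history(db, session_id)
    reply = generate(history, payload["message"])
    now = time.time()
    db.executemany(
        "INSERT INTO messages VALUES (?, ?, ?, ?, ?)",
        [
            (payload["user_id"], session_id, "user", payload["message"], now),
            (payload["user_id"], session_id, "assistant", reply, now),
        ],
    )
    db.commit()
    return {"session_id": session_id, "reply": reply}

# Stand-in for the real model call
def fake_generate(history, message):
    return f"Turn {len(history) // 2 + 1}: you said {message!r}"

db = open_store()
first = handle_chat(db, {"user_id": "u1", "message": "Hi"}, fake_generate)
second = handle_chat(
    db,
    {"user_id": "u1", "session_id": first["session_id"], "message": "Thanks"},
    fake_generate,
)
print(second["reply"])
```

In a Django or Flask app, `handle_chat` would be called from the route handler, with authentication and input validation in front of it.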

Frontend Development

Front-end development is basically where your entire chat app either shines or flops. You’ve got to nail it for users. Here’s how you aim for that high bar, business-style:

- Design a user-friendly interface:

- Your interface absolutely has to be clear and intuitive. No one’s got time to squint at cryptic icons or guess which button to click. Go with established frameworks, like React, Angular, or Vue for web, or if we’re talking mobile, shoot for React Native, Swift, or Kotlin. Pick your stack with an eye toward reliability and future-proofing, not just the latest fad.

- Don’t neglect essential features, like message timestamps, typing indicators, etc.

- Clean visuals matter too. Make sure it’s easy to spot who’s speaking. Using avatars and color-coded speech bubbles boosts clarity and reduces user confusion.

- Ensure seamless communication between the front end and back end. Use HTTPS for all server communication, including the messages sent to the `POST /chat` endpoint. For a truly engaging experience, integrate WebSockets or similar tech to deliver real-time updates, such as replies that appear as they are generated.

- Optimize performance and accessibility. The interface must support screen readers and fast keyboard navigation, making the product usable for everyone. Meanwhile, don’t let load times drag; minimize bundle sizes and enable aggressive caching, as speed is part of customer experience now.

Phase 3: Testing and Deployment

Launching an app straight into the market without proper testing is a one-way ticket to disaster (and a flood of complaints). Before anything goes public, it’s essential to lock down a solid QA phase and a deployment game plan. Let’s break it down:

Comprehensive Testing

There are various methods you can include in your testing plan, depending on what your project requires. Below are some of them:

- Unit Testing: Each backend component – APIs, session management, database queries – needs to get verified. Trust us, issues at this level can kill user trust fast.

- Integration Testing: Ensure synergy between backend, frontend, and your NLP engine.

- Load and Stress Testing: Simulate peak loads—think Black Friday traffic. Know exactly how much your app can handle before anyone else does.

- Model Performance Evaluation: Metrics like perplexity and BLEU scores help measure the quality of your app’s responses, but pair them with real human reviews. One bad (or offensive) AI response is all it takes to go viral for the wrong reason.

- Security Testing: Sweep for vulnerabilities, like SQL injection, XSS, you name it. Encrypt data at rest and in transit.

- User Acceptance Testing (UAT): Let a select group of end-users test the waters. Their feedback helps your team improve the app’s usability and response accuracy.
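For the model evaluation step, perplexity is straightforward to compute once you have the log-probability the model assigned to each token of a reference text. The sketch below assumes you already have those values and that they are natural logarithms. The numbers are invented to show the behavior.

```python
import math

def perplexity(token_logprobs):
    """Perplexity = exp of the average negative log-probability per token."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    avg_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(avg_nll)

# A model that gives each correct token probability 0.5 vs. one that gives 0.1
confident = [math.log(0.5)] * 4
unsure = [math.log(0.1)] * 4

print(round(perplexity(confident), 2), round(perplexity(unsure), 2))
```

Lower perplexity means the model was less "surprised" by the text. It says nothing about tone or safety, which is why human review still matters.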

Deployment Strategy

An effective deployment strategy will support growth and resilience. Below is what you need to include in the strategy:

- Infrastructure Setup: Rather than deploying your app on a single physical server, you should host it on cloud platforms like AWS, Google Cloud, or Azure. These cloud services offer the computing power, networking, and storage you need, even under growing traffic. Further, they come with auto-scaling groups to automatically add or remove servers when traffic changes.

- Containerization & Orchestration: By packaging your app in Docker containers and deploying it with Kubernetes, you can update and scale the app more easily later.

- CI/CD Pipeline: Use a CI/CD pipeline to automatically compile, test, and deploy new code to the live app.

- Monitoring & Logging: Leverage monitoring and logging tools like Prometheus or Grafana to track the app’s performance metrics, such as response times or error rates.

- Rollback Strategy: Prepare a backup plan to quickly restore to the previous version in case serious issues appear after launch.

Phase 4: Post-Launch and Future Improvements

Deploying the app is not the final phase of the project. If you want to keep your app high-performing and reliable, it’s crucial to continuously monitor it, gather feedback, and make necessary improvements. Below is what you need to do in this post-launch phase:

Monitor and maintain your app

- Track real-time performance metrics (e.g., API latency, server load, or model response times) to see how the app performs in real-world situations.

- Use app log data to spot issues like failed requests or timeouts. This lets your team debug instantly and release maintenance updates to avoid further errors.

- Plan the scaling of the infrastructure when traffic or data volumes increase to maintain high performance. Accordingly, you can either add more machines to the system (horizontal scaling) or add more resources to a single machine (vertical scaling).
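As a small illustration of the metrics mentioned above, the following sketch summarizes latency percentiles and error rate from request logs. The log record format is an assumption; in production, tools like Prometheus usually compute these figures for you.

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile: smallest value with at least pct% of data at or below it."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def summarize(requests):
    """Compute median latency, tail latency, and server error rate."""
    latencies = [r["latency_ms"] for r in requests]
    errors = sum(1 for r in requests if r["status"] >= 500)
    return {
        "p50_ms": percentile(latencies, 50),
        "p95_ms": percentile(latencies, 95),
        "error_rate": errors / len(requests),
    }

# Invented log records: mostly fast responses, one slow reply, one timeout
latencies = [120, 130, 110, 900, 125, 140, 135, 115, 128, 2000]
requests = [
    {"latency_ms": ms, "status": 503 if ms == 2000 else 200}
    for ms in latencies
]

summary = summarize(requests)
print(summary)
```

Tail latency (p95) often tells you more than the average here, since a handful of slow model responses can spoil the experience even when the typical request is fast.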

Gather user feedback and make iterative updates

- Offer diverse feedback channels inside the app, like surveys, email forms, or support chat, so that real users can share their experiences.

- Make necessary updates based on user feedback and performance analytics.

Improve the model

- Gather anonymized conversation data while respecting privacy laws. Then, use the new data to fine-tune the LLM’s domain knowledge and precision.

- Adopt RLHF (Reinforcement Learning from Human Feedback), in which human reviewers evaluate and rank model responses. Then, use those rankings to keep adjusting the model toward user expectations.

- Check whether the model produces harmful or biased outputs and update filtering mechanisms.
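One small, concrete piece of the RLHF workflow is turning reviewer rankings into "chosen vs. rejected" pairs, a format commonly used to train a reward model. The sketch below shows only that data-preparation step, with field names we made up; the reward-model training and reinforcement learning stages are outside its scope.

```python
from itertools import combinations

def ranking_to_pairs(prompt, ranked_responses):
    """Expand a best-first ranking into (chosen, rejected) preference pairs."""
    # combinations() preserves input order, so `better` always outranks `worse`
    return [
        {"prompt": prompt, "chosen": better, "rejected": worse}
        for better, worse in combinations(ranked_responses, 2)
    ]

# A reviewer ranked three candidate replies from best to worst
ranked = [
    "Your order ships tomorrow; here is the tracking link.",
    "Your order is on its way.",
    "I don't know.",
]
pairs = ranking_to_pairs("Where is my order?", ranked)
print(len(pairs))
```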

Always ensure security and compliance

- Continuously review security measures and data handling practices to meet privacy regulations.

- Perform security audits regularly to protect user information.

Expand features

- Add multimodal capabilities and integrate with external services (e.g., CRMs or payment gateways). Further, you may leverage user profiles or interests to offer more personalized, relevant responses.

Building a ChatGPT-Like Application with Designveloper

ChatGPT is one of the most widely used AI tools today. The technology behind it makes applications more intelligent and productive across tasks, from marketing automation to customer service.

Through this blog post, we expect you to have a comprehensive understanding of what a ChatGPT-like application looks like and how to build a similar one.

Do you want to integrate the power of ChatGPT or any similar LLMs into your existing system? Or do you prefer developing a ChatGPT-like application from scratch? Regardless of your goals, Designveloper is willing to help you in this journey.

We are one of the best software and AI development companies in Vietnam. Since our foundation in 2013, we have helped clients across industries build more than 200 successful projects that streamline their workflows, increase productivity, and improve user experiences.

We’ve developed Rasa-powered applications that keep conversation data secure. We also integrate cutting-edge technologies, like LangChain or OpenAI, into enterprise applications to automate various business tasks. Further, we embedded a chatbot into a recruitment website to let employers list open positions and match them intelligently with suitable candidates. We have also built LLM-powered medical assistants that automatically detect patients’ health indicators and send them to nurses’ devices.

Our solutions help our clients increase app traffic and attract more potential customers a few months after launch. We have received positive customer reviews for our on-time delivery, excellent communication, and dedication to excellence.

Do you want to access our talents to advance your AI journey? Let’s discuss your ideas now!
