What is LangGraph? Key Concepts, Use Cases, and How to Get Started

August 11, 2025

Imagine building an AI application in which several language model agents collaborate on a complex task. Coordinating these agents, keeping context across steps, and getting reliable results can be difficult. LangGraph is a tool for solving this challenge. It is a framework in the LangChain ecosystem for defining, coordinating, and executing multiple LLM (Large Language Model) agents in complex workflows. In other words, for anyone wondering what LangGraph is: it is a stateful orchestration framework for AI agents that gives developers more control over how those agents interact and solve problems.

LangChain launched LangGraph in early 2024 as an addition to its toolkit, and it has rapidly become popular among AI developers. It reached a stable release in June 2024, and by late 2024 about 43 percent of the organizations using LangSmith, LangChain’s observability tool, were sending LangGraph traces from their applications. In this article, I will break down the main ideas of LangGraph, discuss its applications with examples, and show you how to start working with this powerful framework.

LangGraph at a Glance

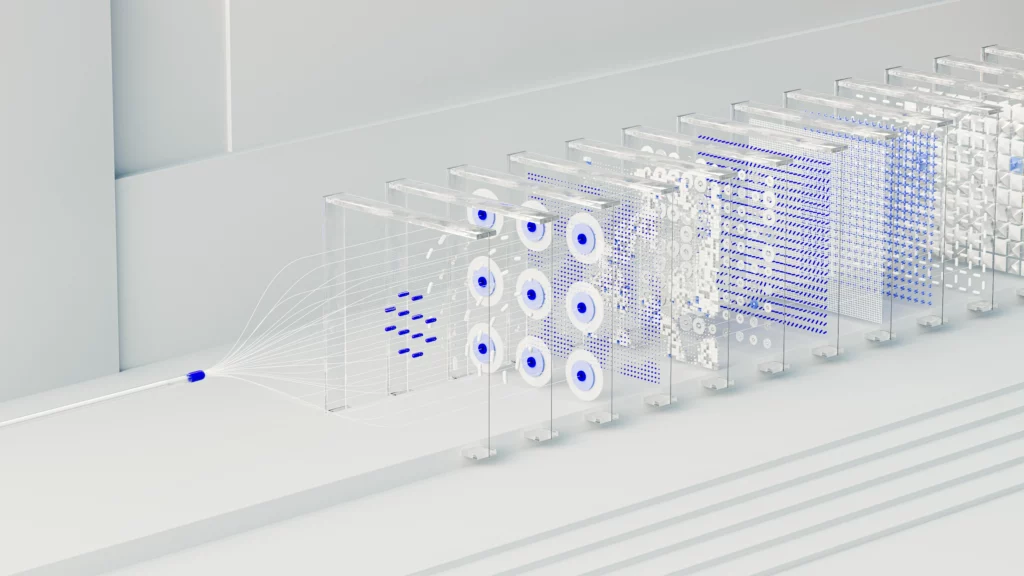

LangGraph is an open-source library from the LangChain team and is free to use. It is available for both Python and JavaScript, which makes it accessible to a large number of developers. In contrast to more basic chain-based systems, LangGraph coordinates AI workflows as a graph. Instead of a linear sequence of steps, an application is modeled as a directed graph of nodes and edges.

The graph is composed of nodes (each representing a unit of work, e.g. an LLM call or a tool invocation) and edges that define the flow of information and control between those units. By modeling workflows as graphs, with the option of loops or cyclical flows, LangGraph allows more complex multi-agent interactions and decision-making processes than linear pipelines. The framework manages state across the graph automatically, so every agent gets the context it requires as the workflow continues. In short, LangGraph coordinates the various AI components of an application so that they work together, remember previous interactions, and follow complex control flows.

Is LangGraph free and open source? Yes. LangGraph is released under the MIT open-source license and is free to use for any project. The core LangGraph library can be self-hosted and integrated into your own infrastructure without cost. For production deployments, the LangChain team also offers LangGraph Platform as a managed service (with additional features like a hosted server, developer studio, and scaling options), but using the LangGraph open-source framework itself does not require any subscription. This makes LangGraph an attractive option for developers and companies who want full control over their AI agent workflows without vendor lock-in.

Key Concepts of LangGraph

LangGraph introduces a few core concepts that set it apart from simpler orchestration tools. Understanding them explains what LangGraph does internally and how it makes advanced AI applications possible. The fundamental ideas are graph structure, state management, and agent coordination, along with additional features such as persistence and human interaction. These concepts are summarized below:

Graph Structure

LangGraph represents an AI application as a directed graph. The graph nodes are discrete units of work or computation, usually Python functions that do something like call an LLM, query a database, call a tool or API, or implement business logic. Edges are the links between nodes which define the flow of execution. An edge may be a straight path or a conditional branch that sends the workflow in one direction or another depending on the result of a node (like an if-else decision).

This graph-based method introduces clarity and modularity: each node performs a specific sub-task, and the edges determine how the system moves between steps. LangGraph allows cycles (loops) in the graph, so it supports iterative processes in which an agent repeats steps as required, something that is hard to achieve in a strictly linear chain-based system. The graph structure therefore forms the skeleton on which complex, non-linear workflows are built.
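To make the idea of nodes, edges, and cycles concrete, here is a minimal plain-Python sketch of a graph runner. It is not LangGraph’s implementation or API: the names NODES, EDGES, and run are invented for illustration, and the sketch only assumes that a node is a function from state to state and that an edge is a function that picks the next node.

```python
from typing import Callable, Dict, Optional

State = Dict[str, int]

def increment(state: State) -> State:
    """Node: one unit of work. Here it just bumps a counter."""
    return {**state, "count": state["count"] + 1}

def report(state: State) -> State:
    """Node: final step that records a result."""
    return {**state, "result": state["count"] * 10}

def route_after_increment(state: State) -> str:
    """Conditional edge: loop back until the counter reaches 3."""
    return "increment" if state["count"] < 3 else "report"

# Hypothetical registry of nodes, keyed by name.
NODES: Dict[str, Callable[[State], State]] = {
    "increment": increment,
    "report": report,
}

# Each edge maps a node to a function that returns the next node name,
# or None when the workflow should stop.
EDGES: Dict[str, Callable[[State], Optional[str]]] = {
    "increment": route_after_increment,  # conditional edge that can form a cycle
    "report": lambda state: None,        # terminal node
}

def run(entry: str, state: State) -> State:
    """Follow edges from the entry node until an edge returns None."""
    current: Optional[str] = entry
    while current is not None:
        state = NODES[current](state)
        current = EDGES[current](state)
    return state

final_state = run("increment", {"count": 0})
print(final_state)  # {'count': 3, 'result': 30}
```

The increment node runs three times because its outgoing edge keeps routing back to it, which is the kind of loop a strictly linear chain cannot express.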

State Management

In multi-step AI processes, it is essential to be able to track information (memory) between steps. LangGraph has automatic state management to preserve and pass context across the graph. The state is a common object (such as a dictionary or Pydantic model) that nodes may read and write to during the execution of the program.

In a chatbot application, for example, the state could store the conversation history so that the AI remembers what was said previously. In a data analysis agent, the state may contain intermediate results or user preferences. LangGraph keeps this state up to date as agents go about their work. Since the state is centrally managed, any agent (node) can always access the most recent context it needs to perform its task. This relieves developers of having to pass context around explicitly or deal with global variables. Instead, LangGraph provides consistency and continuity of data, which is vital for coherence in complex conversations or multi-step calculations. Automatic state management is one of LangGraph’s most useful features: by handling context behind the scenes, it makes development easier and less error-prone.
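The merge step can be pictured with a small plain-Python sketch. This is a simplified model of the idea, not LangGraph’s actual code: the REDUCERS table and the apply_update function are hypothetical names, and the only assumption is that each node returns a partial update that is combined with the shared state field by field.

```python
import operator
from typing import Any, Callable, Dict

# Hypothetical reducer table: field name -> function(old_value, new_value).
REDUCERS: Dict[str, Callable[[Any, Any], Any]] = {
    "messages": operator.add,  # append new messages to the existing list
}

def apply_update(state: Dict[str, Any], update: Dict[str, Any]) -> Dict[str, Any]:
    """Merge a node's partial update into the shared state."""
    merged = dict(state)
    for key, value in update.items():
        if key in REDUCERS and key in merged:
            merged[key] = REDUCERS[key](merged[key], value)
        else:
            merged[key] = value  # no reducer: the last write wins
    return merged

state = {"messages": ["user: hi"], "language": "en"}
state = apply_update(state, {"messages": ["assistant: hello!"]})  # appended
state = apply_update(state, {"language": "fr"})                   # overwritten
print(state)
# {'messages': ['user: hi', 'assistant: hello!'], 'language': 'fr'}
```

Fields with a reducer accumulate values across nodes, while fields without one simply hold the most recent value, which is why a conversation history and a single preference can live in the same state object.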

Agent Coordination and Control Flow

At its core, LangGraph is about coordinating multiple agents (or chain components) to work towards a goal. It makes sure that the agents act in the right sequence, under the appropriate conditions, and with the required information shared among them.

This coordination means you can have specialized agents, e.g. one agent to plan a task, another to retrieve information, and another to produce a final answer, all within one coordinated system. LangGraph supports advanced control flow constructs such as loops (cycles) and conditional branches, allowing agents to make decisions and even retry or revise steps when necessary. Because LangGraph controls the flow of execution, developers can focus on high-level logic without worrying about low-level scheduling of agent actions. It is in a sense the project manager of your AI agents, ensuring that each piece of the workflow occurs when it should and that the correct data is relayed to the next step. This results in more deterministic and debuggable behavior than letting an LLM agent run in an unbounded loop. The outcome is sound, organized orchestration of AI work.

Multi-Agent Support

LangGraph is particularly well suited to multi-agent systems because of its graph design and statefulness. In a multi-agent system, different AI agents perform different subtasks (e.g. one agent may specialize in knowledge retrieval while another specializes in reasoning or decision-making). LangGraph gives these agents the supporting structure to communicate and collaborate. The agents can be modeled as nodes, and the graph edges and shared state determine their interactions.

Such a modular design has advantages such as specialization (each agent can be configured or triggered to perform its particular task) and simplified troubleshooting (you can identify which node/agent may be causing a problem). LangGraph can help create multi-agent workflows to address complex problems in which the work is broken down and then the results are combined by the orchestrator. As an example, a multi-agent research assistant could be designed with one agent retrieving relevant documents, another agent processing the content to find answers, and a third agent generating a coherent report, all running in a single LangGraph workflow.

Persistence and Debugging

LangGraph has a persistence layer, implemented through checkpointers, that can save the state of the graph at different points. This enables powerful features such as time travel (replaying or exploring alternative execution paths) and fault tolerance (resuming from a checkpoint if something goes wrong). LangGraph also supports breakpoints that halt execution, and human-in-the-loop workflows in which a person can step in at specific steps.

These properties are essential for debugging complex agent behavior and for applications where human confirmation is required (e.g. an AI agent may wait until a human confirms an answer before continuing). Another feature is streaming support: LangGraph can stream LLM output token by token to give real-time feedback in user interfaces. Together, these features make LangGraph suitable for production, where robustness and user experience are priorities. They also show how much attention the framework pays to giving developers visibility into and control over the agent’s reasoning process, rather than treating the agent as a black box.
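The checkpoint and time-travel ideas can be illustrated without any framework. The following plain-Python sketch is a conceptual model only, not LangGraph’s checkpointer: the STEPS list and the run_with_checkpoints function are hypothetical, and it assumes a checkpoint is simply a copy of the state taken after each step.

```python
import copy
from typing import Any, Callable, Dict, List

State = Dict[str, Any]

def draft(state: State) -> State:
    return {**state, "draft": f"Draft about {state['topic']}"}

def review(state: State) -> State:
    return {**state, "approved": True}

# Hypothetical fixed sequence of steps, standing in for a graph.
STEPS: List[Callable[[State], State]] = [draft, review]

def run_with_checkpoints(state: State, checkpoints: List[State], start: int = 0) -> State:
    """Run the steps from index `start`, saving a snapshot after each one."""
    for step in STEPS[start:]:
        state = step(state)
        checkpoints.append(copy.deepcopy(state))
    return state

checkpoints: List[State] = []
final = run_with_checkpoints({"topic": "graphs"}, checkpoints)

# "Time travel": go back to the snapshot taken after `draft`, let a human
# edit it, and re-run only the remaining steps from that point.
edited = {**checkpoints[0], "draft": "Human-edited draft"}
replayed = run_with_checkpoints(edited, [], start=1)
print(replayed)
# {'topic': 'graphs', 'draft': 'Human-edited draft', 'approved': True}
```

The same snapshots support fault tolerance: if a later step fails, execution can restart from the last saved state instead of from the beginning.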

To conclude, the main ideas of LangGraph center on managing complexity with a graph model. Graph structures provide flexibility in workflow design; state management ensures that context is maintained; coordination and control flow tools enable complex logic and multi-agent systems; and built-in capabilities such as persistence, streaming, and human-in-the-loop support make it practical for real-world, fault-tolerant systems. This set of features is why LangGraph is described as giving developers more control over agent workflows than previous agent frameworks.

Common Use Cases of LangGraph

LangGraph is a general framework, but it truly shines in scenarios where simple linear chains are not enough. Here are some concrete examples and use cases of LangGraph in action:

Advanced Customer Service Chatbots

LangGraph is used by companies to create smart customer support chatbots that are not limited to Q&A. As an example, a chatbot with LangGraph can remember previous orders or preferences of a user stored in the shared state and utilize that information to personalize a response. It can deal with multi-step requests (such as checking an order status, followed by making a return), and can even escalate to a human agent when required at a breakpoint. This enhances customer service by delivering faster, context-sensitive responses and human-in-the-loop handoff to handle more complex problems.

Research Assistant Agents

LangGraph can organize several agents into a research assistant for analysts or students. One agent may search scholarly articles or data sources, another may extract and summarize the main points of those sources, and a third may write a report or answer questions. This type of system can automatically collect information and surface key insights from large sets of documents. The graph workflow makes sure that the search and summarize steps occur in sequence and that the summary agent receives all the information it requires. The use case shows that LangGraph can control retrieval-augmented generation (RAG) in a systematic manner.

Personalized Learning Systems

In education technology, LangGraph can drive tutoring or e-learning assistants that adapt to the individual learner. As an example, an intelligent tutor may employ one agent to evaluate a student’s performance on practice questions, and another agent to create new exercises or explanations based on the student’s weak areas. The state would track the learner’s progress and preferences. LangGraph enables such a system to adapt the content dynamically, acting in effect as a customized curriculum planner and tutor in one. The advantage is a personalized learning process in which the AI keeps track of what the student has not yet grasped and returns to review it.

Business Process Automation

Companies are using LangGraph to automate and simplify their internal processes. As an example, consider a workflow that reviews incoming documents (forms, invoices, etc.) for approval: one node uses an LLM to extract key information from the document, another checks it against a database, and a final agent decides whether to approve the document or flag it for human review. In the same way, project management activities or data analysis can be divided among several agents (one gathers data, another interprets it, another writes a report). With LangGraph, these processes become automated pipelines that can accommodate decision points and exceptions.

For example, a LangGraph workflow can automate a multi-step report generation process that once involved a number of human handoffs. The outcome is higher productivity and less human error, because the routine tasks are handled by the coordinated agents.

Complex Multi-Step Reasoning Tasks

Whenever an AI solution requires reasoning through a series of steps (tool use, calculations, intermediate conclusions), LangGraph is a strong fit. Developers have used LangGraph to build systems like coding assistants (where the agent writes code, tests it, debugs, and tries again in a loop), data pipelines that require cleaning data then applying multiple AI models in sequence, or decision-support systems that weigh different factors via separate agents. The graph approach handles these branching or looping workflows gracefully, whereas a simple chain might fail if a step needs to be revisited or if parallel paths need to converge.

These examples illustrate that LangGraph’s use cases typically involve interactive and intelligent AI agents working in concert. From customer support to research, education, and enterprise automation, LangGraph enables AI solutions that are context-aware, can handle multiple subtasks, and can incorporate logic beyond a single prompt-response. Notably, the rise of such agentic workflows is reflected in industry trends – by late 2024, 43% of organizations using LangSmith (LangChain’s monitoring suite) were sending LangGraph traces, indicating a significant uptick in complex multi-step AI applications. This suggests that teams across various domains find value in LangGraph to build more reliable and controllable AI systems than what was possible with earlier one-shot or single-agent approaches.

Getting Started with LangGraph

Now that we have covered what LangGraph is and why it’s useful, let’s look at how to get started with LangGraph. Despite enabling complex functionality, LangGraph is designed to be developer-friendly. Here are the basic steps to start building with LangGraph:

1. Installation

You can install LangGraph via pip, as it is available on PyPI. Run the following command in your Python environment to get the latest version:

pip install -U langgraph

This will install the LangGraph library (and its LangChain dependencies if not already present). LangGraph has both Python and JavaScript SDKs, so if you are working in a Node.js environment, you can install the JavaScript package via npm or yarn as well.

2. Define the Graph and State

The core of a LangGraph application is the StateGraph. You start by defining what the state will contain. For example, if building a chatbot, your state might include a list of messages. In Python, you can define a TypedDict or dataclass for the state. Then you create a StateGraph object using that state structure. This sets up an empty graph where each future node will automatically have access to an instance of that state. For instance:

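    from typing import Annotated, TypedDict

    from langgraph.graph import StateGraph
    from langgraph.graph.message import add_messages

    class State(TypedDict):
        # Conversation history; add_messages appends new messages instead of overwriting
        messages: Annotated[list, add_messages]

    graph = StateGraph(State)

Here, we’ve defined a state with a single field, messages, which will hold the conversation log. The add_messages annotation tells LangGraph to append new messages to the list rather than replace it. StateGraph(State) creates a new graph that knows this is the shape of its state.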

3. Add Nodes (Agents) to the Graph

Next, you’ll add nodes to the graph. Each node is essentially a function (or another callable, such as a LangChain runnable) that performs a part of the task. You might have a node that calls an LLM, another node that calls a calculation tool, etc. Using our chatbot example, you could have one node that takes the user’s input and sends it to an LLM to get a response. You define a Python function for that behavior, then use graph.add_node(name, function) to add it to the graph. For example:

    def chatbot_reply(state: State) -> dict:
        # llm is assumed to be a LangChain chat model created elsewhere
        user_message = state["messages"][-1]    # last message from the user
        response = llm.invoke([user_message])   # call the LLM
        return {"messages": [response]}         # partial update to the messages field

    graph.add_node("reply", chatbot_reply)

In this snippet, "reply" is the node name. The function chatbot_reply reads the latest user message from the state and returns a new message from the LLM. LangGraph merges that return value into the shared state; if the messages field is declared with a reducer such as add_messages, the new message is appended rather than overwriting the list. You can add as many nodes as needed, each encapsulating a piece of your logic.

4. Connect Nodes with Edges

After defining nodes, you specify how they connect. An edge from Node A to Node B means that after A executes, B will execute next. When a node has several possible successors, you can create conditional execution paths (for example, an edge that is only followed if Node A’s result meets some criteria). In a simple flow, you might have a single path. In our chatbot, there is only one node, "reply", so we set both the graph’s entry point and its finish point to that node:

    graph.set_entry_point("reply")
    graph.set_finish_point("reply")

This configuration means the graph starts at the "reply" node and also ends there. Note that this does not create a cycle inside the graph: each invocation runs "reply" once and then stops, and the graph is simply invoked again when new input arrives. For more complex workflows, you would use graph.add_edge(node1, node2) for explicit transitions. For instance, you could connect a "plan" node to a "search" node to a "summarize" node in a research assistant scenario. The directed edges tell the LangGraph orchestrator which sequence (or branching logic) to follow.

5. Compile and Run the Graph

Once nodes and edges are defined, you typically compile the graph into a runnable form. In LangGraph, calling compiled_graph = graph.compile() will prepare the graph (check for any issues, lock in the structure). You can then execute it by passing an initial state and letting it run. Using the chatbot example, you might run it in a loop:

    compiled_graph = graph.compile()

    while True:
        user_input = input("User: ")
        if user_input in ["quit", "exit"]:
            break
        # Start the graph with the new user message as its initial state
        initial_state = {"messages": [("user", user_input)]}
        # By default, stream() yields one update per node as each node finishes
        for event in compiled_graph.stream(initial_state):
            for node_name, update in event.items():
                # Each update holds what the node returned; print the new message
                answer = update["messages"][-1]
                print("Assistant:", answer.content)

The code above outlines how you might continuously take user input and feed it to the graph for processing. With its default settings, stream() yields the state update produced by each node as soon as that node finishes; token-by-token output, so that the assistant’s answer can be displayed incrementally, is available by passing stream_mode="messages". Because no checkpointer is configured here, each turn starts from a fresh state containing only the latest user message. The loop continues until the user decides to quit.

For non-interactive workflows, you might simply call result = compiled_graph.invoke(initial_state), which returns the final state after the sequence of nodes executes. The LangGraph documentation and tutorials provide examples of both synchronous execution and streaming.

6. Leverage Advanced Features

As you become comfortable, you can utilize LangGraph’s advanced capabilities. For example, you can insert breakpoints or conditional logic that halts the agent and awaits human confirmation (useful in high-stakes applications like medical or financial domains). You can also persist the state to a database at checkpoints so that long-running processes can resume after interruptions. LangGraph Studio, an IDE announced in 2024, allows you to visually debug and monitor your graph’s execution on your local machine. These tools can greatly enhance the development experience for complex graphs, but they build on the same fundamentals described above.

7. Resources and Tutorials

Getting started with LangGraph is best done with guided examples. The official LangChain documentation provides a Quick Start guide and conceptual docs. There are also community tutorials available. For instance, DataCamp offers a LangGraph tutorial that walks through building a simple chatbot step by step. Analytics Vidhya has a free LangGraph tutorial for beginners that includes hands-on examples like a support chatbot with tools and memory. (Note: Some people refer to it as a Langraph tutorial without the capital “G”, but it’s the same LangGraph framework.) These learning resources illustrate LangGraph examples in code, helping new users quickly pick up the concepts. By following a tutorial and experimenting with a small project, you can gain a practical understanding of LangGraph’s workflow approach in a few hours.

Conclusion

This guide explained what LangGraph is and why teams use it for agentic workflows. The next step is to ship a real system. That is where we help.

We are Designveloper, a Vietnam-based web and software company in Ho Chi Minh City. We build production software across web, mobile, AI, and VoIP. Our team delivers agentic apps that use LangGraph for clear control flow, shared state, and human-in-the-loop checks.

At Designveloper, we design graphs with typed state, nodes, and conditional edges. We add checkpointers for recovery and breakpoints for review. Additionally, we can help you connect tools, APIs, data sources, and more. The goal is simple: reliable agents that your users can trust.

Typical engagements look like this:

- Discovery workshop (1–2 weeks): define tasks, tools, and guardrails.

- Proof of concept (2–4 weeks): build a small LangGraph with memory, tools, and evals.

- Production hardening: scale, add observability, and security reviews.

- Handoff and support: docs, runbooks, and training for your team.

Relevant solutions we ship include customer-support copilots, research agents, document-workflow bots, and data-ops automations. Each solution uses clear graphs, fast feedback loops, and measurable KPIs.

If you want a LangGraph pilot that proves value fast, talk to us. We can map your use case to a graph, pick the right tools, and deliver a working agent with strong observability. We move quickly, keep quality high, and align the roadmap with your business goals.
