Vector databases have gained traction in recent years. They play an important role in a complex AI system, aiming to facilitate and accelerate similarity search for information. So, what is a vector database, exactly? How does it work, and what is it mainly used for? You’ll find all the fundamentals of vector databases in today’s post. Keep reading!

What is a Vector?

Before learning about what a vector database is, let’s quickly define a vector. A vector is a quantity expressed by magnitude (“size”) and direction instead of a single number. In the maths and physics we learn at school, for example, a vector in a two-dimensional space is broken down into two components: X and Y.

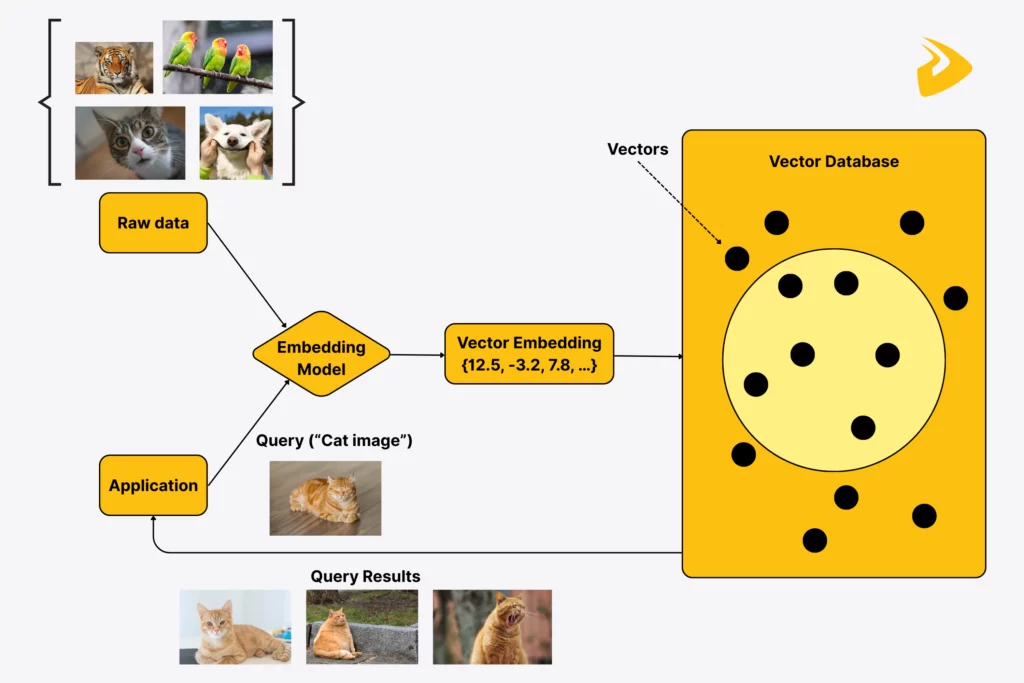

The concept of a vector remains the same in AI and machine learning, but extends to an n-dimensional space. In these fields, a vector is an array of numerical values that correspond to different latent attributes of a piece of data. These numbers exist in a multi-dimensional space and express the relationships and meaning of data points. Look at the “cat” image below:

The image is considered a vector in AI and machine learning after being embedded into numerical formats. It has various latent/hidden features, like “eyes,” “ears,” “fur color,” “claws,” or “texture histogram,” which have corresponding numbers.

So, why are vectors crucial in AI applications? This is because they represent complex things, like text, images, audio, and videos. By comparing numerical vectors, AI applications can identify semantically relevant content that may use different words or phrases. For example, after analyzing different dimensions of the image vector above, AI can search for the most similar (not exact) images. This also applies to other data types like text, audio, or video. When you ask AI about “cars,” it’ll use vectors to look for documents that contain not only “cars” but also other related terms like “automobiles” or “vehicles.”

What is a Vector Database?

A vector database is a container of the vectors we mentioned above, regardless of their type (e.g., text, images, audio, or videos). Its main goal is to store, index, and find such vectors for specific use cases. So, why are vector databases important?

Normally, traditional databases are used to handle structured data that has rigid formats and can be organized in rows and columns. However, in recent years, our world has been flooded with unstructured data, like text documents, social media posts, short videos, or podcast audio. This data is very large and, by commonly cited industry estimates, makes up around 90% of enterprise-generated data.

Previously, organizing and analyzing unstructured data wasn’t easy: turning it into structured formats was labor-intensive and time-consuming, not to mention the human errors arising during the conversion process. Besides, traditional databases rely on exact keyword matches. This means that if you ask a database about “motorbikes,” it only returns results with the exact keyword and ignores other relevant documents that may contain synonyms or paraphrases, like “two-wheeled vehicles.” This is where a vector database comes in. It not only handles unstructured data but also allows for more relevant searches.

Vector databases have that capability because they use optimized indexing methods and algorithms to calculate the distance between vectors in a high-dimensional space. The closer two vectors are, the more similar the items they represent. Take the cat image above as an example. The image vector is an array of numbers: {12.5, -3.2, 7.8, …}. By comparing these values with its stored vectors, a vector database can return images whose vectors have similar values, representing shared visual patterns: {11.9, -2.8, 7.5, …} or {12.7, -3.1, 7.6, …}.
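To make the distance idea concrete, here is a minimal sketch in Python. It treats the three numbers quoted above as complete 3-dimensional vectors, which is an assumption for illustration only, since real embeddings usually have hundreds of dimensions. The record names and the unrelated “truck” vector are hypothetical.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Query: the cat image vector, truncated to three illustrative dimensions.
query = [12.5, -3.2, 7.8]

# Stored vectors; the names and the "truck" vector are hypothetical.
stored = {
    "cat_photo_a": [11.9, -2.8, 7.5],
    "cat_photo_b": [12.7, -3.1, 7.6],
    "truck_photo": [-4.0, 9.1, 0.3],
}

# Rank every stored vector by its distance to the query (smaller = more similar).
ranked = sorted(stored, key=lambda name: euclidean(query, stored[name]))
```

Here `ranked` lists the two cat vectors first and the unrelated vector last. This is an exhaustive (brute-force) comparison; the indexing techniques described later exist to avoid scanning every stored vector this way.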

How are vector databases so fast?

Vector databases are fast because they build indexes (specialized data structures) and use ANN (Approximate Nearest Neighbor) algorithms, like IVF (Inverted File) or HNSW (Hierarchical Navigable Small World), to perform similarity search. In other words, they don’t check every vector, but narrow the search space to get the most relevant results in milliseconds, even when they have to deal with millions or billions of vectors.

Besides, many also keep “hot” (frequently searched) data in memory for fast searches while integrating with storage options to optimize memory and store data for later use. Vector databases also use different distance measures, like Euclidean or cosine, to calculate the relevancy of vectors fast.

Another reason behind the high-speed performance of vector databases lies in their horizontal scaling and sharding. Accordingly, the databases can divide a large dataset into smaller, manageable pieces and distribute these chunks to various servers (nodes) for processing. With this capability, the databases can resolve concurrent queries and growing data volumes while maintaining high performance.

How many types of vector databases are there?

Vector databases come in different types, depending on the data types they handle, indexing techniques, storage models, and architecture. But in general, there are several commonly seen types of vector databases as follows:

Single-node

All vectors, indexes, and query handling live on a single virtual or physical server. A single-node vector database is ideal for exploring, experimenting, or small production projects. It’s easy to install and run, with lower deployment costs and simpler maintenance. However, it’s limited by a single machine’s memory, and it’s a single point of failure: if the machine breaks down, the database goes offline.

Distributed

Vector databases like Milvus or Vespa support horizontal scaling and sharding. These capabilities allow them to split a large dataset into pieces (“shards”) and distribute those shards across various servers (“nodes”). When a query arrives, it’s sent to all relevant nodes, and the distributed vector database merges their partial results before returning the final outcome to the user. This type of vector database can handle large datasets that contain up to billions of vectors, as well as a high query volume. Further, it offers scalability and fault tolerance: if one server fails, the others keep handling queries. However, because it runs on multiple servers, this type of database is more complex and costly to deploy and manage.
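The scatter-and-merge flow described above can be sketched as follows. This is a simplified illustration, not how Milvus or Vespa are implemented: each shard is a plain dictionary, shards are queried one after another rather than in parallel, and all names and data are hypothetical.

```python
import heapq
import math

def search_shard(shard, query, k):
    """Each shard returns its own local top-k as (distance, id) pairs."""
    scored = ((math.dist(query, vec), vec_id) for vec_id, vec in shard.items())
    return heapq.nsmallest(k, scored)

def search_cluster(shards, query, k):
    """Scatter the query to every shard, then merge the partial results."""
    partial = []
    for shard in shards:  # a real system would query the shards in parallel
        partial.extend(search_shard(shard, query, k))
    return [vec_id for _, vec_id in heapq.nsmallest(k, partial)]

# Hypothetical dataset split across two shards.
shards = [
    {"a": [0.0, 0.0], "b": [5.0, 5.0]},
    {"c": [1.0, 1.0], "d": [0.2, 0.1]},
]
top2 = search_cluster(shards, query=[0.0, 0.0], k=2)
```

Because every shard returns its local top-k, the merged list contains the global top-k even though no single node has seen the whole dataset.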

Cloud-based (Managed)

Instead of hosting and managing all vectors, indexes, and even metadata in your own infrastructure, you let a cloud platform handle these complexities. Pinecone, Google Vertex AI Matching Engine, and Azure Cognitive Search are examples of such cloud-based vector databases. They benefit companies that want to focus on their applications, or that face unpredictable workloads and need automatic scaling to handle them. However, they may be a poor fit if your team prioritizes full control over data privacy.

GPU-accelerated

Many vector databases use GPUs to perform complex calculations concurrently. They’re ideal if you want real-time search or recommendation where sub-millisecond latency is important. Further, they work best with very high query volumes or extremely large vector dimensions (e.g., >1,000). Combining GPUs will make vector databases more powerful in index creation and similarity search. However, one visible downside is that buying or renting GPUs costs more than CPUs.

What algorithms does a vector database use?

A vector database doesn’t rely on only one algorithm but combines various types of algorithms to store, index, and find vectors effectively. At its core are similarity-search algorithms for ANN searches. Some popular ANN algorithms include:

- IVF (Inverted File): Group vectors into clusters and assign a specific centroid to each cluster. When querying, a vector database will find the centroids most relevant to the query vector and then only scan the corresponding clusters to find nearest neighbors.

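- HNSW (Hierarchical Navigable Small World): Build a multi-layer graph in which each vector is linked to its nearby neighbors. A search starts in the sparse top layer and moves greedily toward the query, descending layer by layer to the dense bottom layer.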

- PQ (Product Quantization): Compress vectors into smaller codes to save memory.

- LSH (Locality-Sensitive Hashing): Leverage special hash functions so that similar items (say, semantically similar sentences) receive similar, or even the same, hash codes. This helps group vectors with similar hash codes into the same “bin” or neighboring bins.
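As an illustration of the first algorithm in the list, here is a minimal IVF-style sketch in plain Python. It assumes the centroids are already known (in practice they are usually learned with k-means), and the function names, `nprobe` parameter name, and data are hypothetical rather than taken from any specific database.

```python
import math

def build_ivf(vectors, centroids):
    """Assign every vector to the inverted list of its nearest centroid."""
    lists = {i: [] for i in range(len(centroids))}
    for vec_id, vec in vectors.items():
        nearest = min(range(len(centroids)), key=lambda i: math.dist(vec, centroids[i]))
        lists[nearest].append(vec_id)
    return lists

def ivf_search(query, vectors, centroids, lists, k=1, nprobe=1):
    """Scan only the nprobe clusters whose centroids are closest to the query."""
    by_distance = sorted(range(len(centroids)), key=lambda i: math.dist(query, centroids[i]))
    candidates = [vec_id for i in by_distance[:nprobe] for vec_id in lists[i]]
    return sorted(candidates, key=lambda vec_id: math.dist(query, vectors[vec_id]))[:k]

vectors = {"v1": [1.0, 1.0], "v2": [1.5, 0.5], "v3": [9.0, 9.0], "v4": [8.5, 9.5]}
centroids = [[1.0, 1.0], [9.0, 9.0]]  # assumed; normally learned with k-means
lists = build_ivf(vectors, centroids)
result = ivf_search([8.0, 9.0], vectors, centroids, lists, k=1)
```

With `nprobe=1`, the search compares the query against only two of the four vectors. Raising `nprobe` scans more clusters, which costs time but lowers the chance of missing a true neighbor that sits just across a cluster boundary.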

Beyond these core algorithms, vector databases also leverage index-building algorithms (e.g., K-means or graph construction) and distance metrics (e.g., Euclidean, cosine, or inner product) to evaluate relevancy. Additionally, they use other algorithms to support storage and search, like sharding for distributed storage or caching & memory management for quick reads/writes.
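The three distance metrics named above can behave differently on the same data. The following sketch uses small, hypothetical 2-dimensional vectors to show that cosine similarity ignores vector length, while Euclidean distance and inner product do not.

```python
import math

def dot(a, b):
    """Inner product: larger means more similar (and is sensitive to length)."""
    return sum(x * y for x, y in zip(a, b))

def euclidean(a, b):
    """Euclidean distance: smaller means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1.0 means same direction."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

short = [1.0, 0.0]
long_same_direction = [2.0, 0.0]
orthogonal = [0.0, 1.0]

same_direction_cosine = cosine_similarity(short, long_same_direction)
same_direction_distance = euclidean(short, long_same_direction)
```

Here the two vectors pointing the same way have a cosine similarity of 1.0 even though they are 1.0 apart by Euclidean distance. Which metric fits best usually depends on how the embedding model was trained, so it is worth checking the model's documentation.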

What’s the difference between a vector index and a vector database?

When learning about the best vector databases, you may encounter the term “index.” This term is used in two different senses:

- First, it can mean a standalone vector index library, typically FAISS (Facebook AI Similarity Search).

- Second, it can mean a specialized data structure you create inside a vector database. It organizes data in a searchable way so that a machine can find and retrieve the information most relevant to a user query.

Here, we want to talk about the first sense. The main difference between a standalone vector index (FAISS) and a vector database (Pinecone, Milvus, Weaviate, etc.) is that the index lacks database-level capabilities, most notably data management (e.g., upserting, updating, and deleting data). The index also has no built-in operational features, like sharding, cluster management, or automatic backups.

An index library such as FAISS can act as an additional layer in a vector database, lending its powerful capabilities (like diverse indexing techniques, multithreading, and GPU acceleration) to extend what the database can do. Meanwhile, a vector database is capable of managing data, integrating with ML models for automatic embedding, storing metadata for result filtering, allowing for result reranking, and enabling distributed serving.
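The split between the two roles can be illustrated with a toy example. Both classes below are hypothetical and written for this post; they do not mirror the API of FAISS or of any vector database. The index only stores and searches vectors, while the database layer adds IDs, metadata, upserts, and deletes on top.

```python
import math

class FlatIndex:
    """Index only: stores vectors by position and searches them."""
    def __init__(self):
        self.vectors = []

    def add(self, vector):
        self.vectors.append(vector)

    def search(self, query, k):
        order = sorted(range(len(self.vectors)),
                       key=lambda i: math.dist(query, self.vectors[i]))
        return order[:k]  # positions, not record IDs

class TinyVectorDB:
    """Database layer: adds IDs, metadata, upsert, and delete around an index."""
    def __init__(self):
        self.records = {}  # id -> (vector, metadata)

    def upsert(self, record_id, vector, metadata=None):
        self.records[record_id] = (vector, metadata or {})

    def delete(self, record_id):
        self.records.pop(record_id, None)

    def search(self, query, k):
        index = FlatIndex()  # rebuilt on every query to keep the sketch short
        ids = list(self.records)
        for record_id in ids:
            index.add(self.records[record_id][0])
        return [(ids[i], self.records[ids[i]][1]) for i in index.search(query, k)]

db = TinyVectorDB()
db.upsert("doc1", [0.0, 1.0], {"lang": "en"})
db.upsert("doc2", [1.0, 0.0], {"lang": "vi"})
db.upsert("doc1", [0.9, 0.1], {"lang": "en"})  # same ID: replaces the old vector
hits = db.search([1.0, 0.0], k=1)
db.delete("doc2")
hits_after_delete = db.search([1.0, 0.0], k=1)
```

A production database would update its index incrementally instead of rebuilding it per query; the rebuild here only keeps the example short.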

How Does a Vector Database Work?

A vector database relies on three components: vector storage, vector indexing, and query-based similarity search. Note that it doesn’t work in isolation, but is an integral part of a complex AI system that includes other important components like data loaders, embedding models, rerankers, and LLMs.

Vector storage

Vector databases don’t inherently embed raw data into numerical representations. But common databases (e.g., Pinecone or Milvus) now host or integrate with embedding models, such as OpenAI’s embedding models or CLIP. So, when you choose one of these embedding models, the database will automatically convert the data into vectors (“vector embeddings”). The database then stores these embeddings along with their metadata, which can later be used to narrow down search results.

Vector indexing

To facilitate and accelerate similarity searches in high-dimensional data spaces, vector databases need to index embeddings. This process takes place by creating indexes where vector embeddings are organized in an easy-to-search way. Vector databases now offer a wide range of optimized indexing techniques and corresponding algorithms (IVF, HNSW, PQ, etc.) to map the embeddings to specialized data structures. Depending on your data size and trade-offs between speed and accuracy, you should choose suitable indexing methods.

Querying & similarity search

When a user query arrives, it is converted into a vector using the same embedding model. When the vector database receives the query vector, it measures the distance between that vector and the vectors kept in the index. The database can use ANN algorithms, flat (exact, brute-force) search, or both to calculate similarity metrics. Based on the similarity ranking, the vector database returns the most similar results.

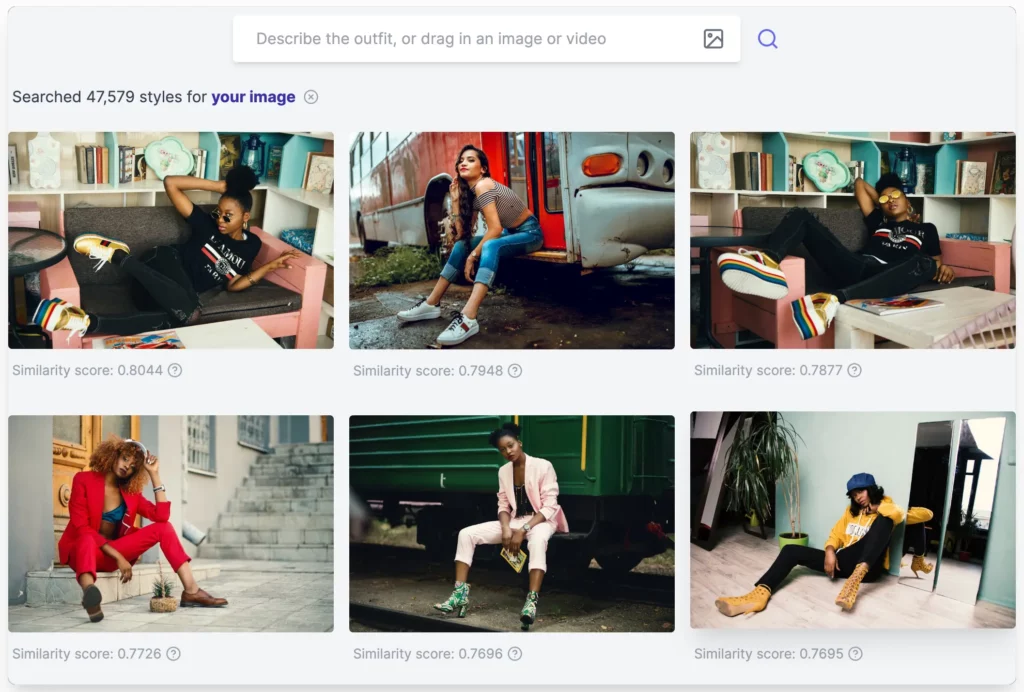

In the example below, we experimented with looking for similar images for the query “streetwear for women” on Shop The Look (a sample app built with Pinecone and Google Vertex AI). Each image result comes with a similarity score that shows how relevant it is to our query:

During similarity search, a vector database can filter results using metadata and reranking models. These tools allow for results that are contextually relevant to a given query.
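Metadata filtering and reranking can be sketched in a few lines. The records, field names, and the “prefer in-stock items” reranker below are hypothetical; real reranking models score query-document pairs with a neural network, which this example replaces with a trivial rule.

```python
import math

records = [
    {"id": "p1", "vector": [0.9, 0.1], "meta": {"category": "women", "in_stock": True}},
    {"id": "p2", "vector": [0.8, 0.2], "meta": {"category": "men", "in_stock": True}},
    {"id": "p3", "vector": [0.7, 0.3], "meta": {"category": "women", "in_stock": False}},
    {"id": "p4", "vector": [0.1, 0.9], "meta": {"category": "women", "in_stock": True}},
]

def filtered_search(query, records, k, **required):
    """Keep records whose metadata matches every required key, then rank by distance."""
    allowed = [r for r in records
               if all(r["meta"].get(key) == value for key, value in required.items())]
    allowed.sort(key=lambda r: math.dist(query, r["vector"]))
    return allowed[:k]

def rerank(hits, score):
    """Reorder a short candidate list using a second scoring function."""
    return sorted(hits, key=score, reverse=True)

hits = filtered_search([1.0, 0.0], records, k=3, category="women")
# Hypothetical reranker: prefer items that are in stock.
final = rerank(hits, score=lambda r: r["meta"]["in_stock"])
final_ids = [r["id"] for r in final]
```

The filter removes the record from the wrong category before any distance is computed, and the reranker then moves the out-of-stock item to the end while keeping the similarity order among the rest.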

Pros and Cons of Using Vector Databases

The vector database market is forecast to reach a global value of $3.04 billion in 2025 and to keep growing to an estimated $7.13 billion by 2029. This growth reflects the transformative benefits these databases bring to businesses:

- Vector databases are designed to search for the most similar content in a high-dimensional data space. They leverage different indexing techniques to speed up searching and optimize performance, even when your datasets are large (up to millions or billions of data points).

- Many vector databases come with built-in horizontal scaling and sharding to enable scalability. These capabilities allow you to add more servers or machines to the system, maintaining its optimal performance when data/query volumes grow.

- Vector databases offer built-in data management tools to keep your data always up-to-date and accurate. Accordingly, you can upload new data or update old data without complex manual steps.

- Vector databases support unstructured and multimodal data. They allow you to search across any data type, as long as the data is converted using the right embedding model so that the database can interpret, store, and find it.

However, one noticeable downside of using vector databases is the accuracy of search results in comparison with brute-force search. As vector databases prioritize speed and memory efficiency, they sometimes trade away some accuracy for these benefits. Besides, as high-dimensional vectors are very large, keeping their indexes in RAM for high speed may increase infrastructure costs. Also, several vector databases, like Pinecone, offer proprietary features, making it hard to move huge datasets between database providers.
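The accuracy trade-off is commonly measured as recall@k: the share of the true nearest neighbors (found by brute force) that the approximate search also returns. The sketch below uses hypothetical data, and the “approximate” result is hard-coded to stand in for the output of an ANN index that missed one neighbor.

```python
import math

def exact_top_k(query, vectors, k):
    """Brute-force ground truth: compare the query against every vector."""
    return sorted(vectors, key=lambda vec_id: math.dist(query, vectors[vec_id]))[:k]

def recall_at_k(approx_ids, exact_ids):
    """Fraction of the true top-k that the approximate search also found."""
    return len(set(approx_ids) & set(exact_ids)) / len(exact_ids)

vectors = {"a": [0.0, 0.0], "b": [1.0, 0.0], "c": [2.0, 0.0], "d": [3.0, 0.0]}
exact = exact_top_k([0.0, 0.0], vectors, k=3)

# Hypothetical output of an approximate index that missed "c".
approximate = ["a", "b", "d"]
recall = recall_at_k(approximate, exact)
```

In this example the approximate search finds two of the three true neighbors. Tracking this number on a sample of real queries is one way to check whether an index configuration is accurate enough for your use case.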

10 Use Cases for Vector Databases

With powerful capabilities, vector databases are highly adaptable in various use cases. Below are their common applications:

Semantic Search

Vector databases differ from traditional databases in their semantic search. Instead of performing exact keyword matches, they transform text data into high-dimensional vectors that cover the meaning and relationships in data points. This allows vector databases to generate semantically relevant results to a query, even when they only contain synonyms or paraphrases.

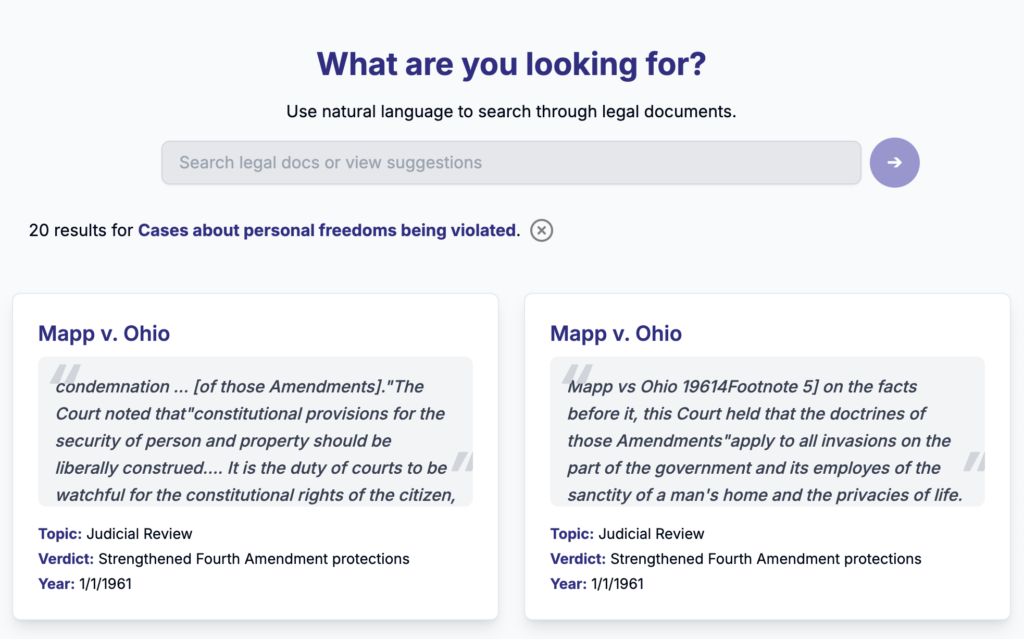

In the example below, we leveraged the sample app using Pinecone and OpenAI to search through legal documents for the query “Cases about personal freedoms being violated.” The results don’t include the same phrases but semantically similar content.

Similarity Search

Vector databases use indexing techniques and algorithms to perform similarity search. They identify content that may not be identical in terms of wording or visual patterns, but is contextually relevant. As all the raw data is converted into numerical values, the databases can index and search it in response to a query or prompt. With this capability, and provided a multimodal embedding model is used, a user can send a text-based request but receive image-based results.

Recommendation Engines

Vector databases support recommendation systems in finding and suggesting content similar to what a user has previously shown interest in. Whether the content is about e-commerce products, favorite movies, or pieces of music, the databases use ANN search to look for relevant items quickly. This leads to more accurate and personalized recommendations.

Retrieval-Augmented Generation (RAG)

RAG has become more prevalent these days, and vector databases are a crucial component in this pipeline. A RAG system aims to extend the inherent capabilities of LLMs. Instead of relying only on their training data, which might be outdated or irrelevant to specific use cases, LLMs can connect with external data sources through a RAG pipeline to gain factually grounded and contextually relevant information. Such information, regardless of its type (e.g., text or images), is accessed and found efficiently thanks to vector databases. As we mentioned, the databases store, index, and perform similarity searches to identify nearest neighbors. This helps LLMs acquire the information most similar to a given query and return more accurate responses.
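The retrieval half of a RAG pipeline can be sketched without any external service. Everything here is a simplified assumption: `embed` is a toy bag-of-words counter standing in for a real embedding model (so it matches shared words, not meaning), the documents are hypothetical, and the final call to an LLM is left out.

```python
import math
from collections import Counter

def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    dot = sum(a[word] * b[word] for word in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

documents = [
    "refunds are processed within five business days",
    "our office is closed on public holidays",
]
index = [(doc, embed(doc)) for doc in documents]  # plays the role of the vector database

def retrieve(question, k=1):
    """Return the k stored documents most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(question):
    """Combine retrieved context and the question into a prompt for an LLM."""
    context = "\n".join(retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

prompt = build_prompt("how long are refunds processed")
```

The resulting `prompt` contains the refund policy sentence, which is what grounds the LLM's answer. In a real pipeline, `embed` would call an embedding model and `index` would be a vector database.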

Generative AI & AI Agents

Vector databases act as a crucial “memory layer” in AI agents and GenAI applications. They store past conversations, events, and user preferences as dense vector embeddings. Whenever the AI receives a query, it searches through vector databases to extract relevant past information, continue chat sessions, and provide consistent and reliable experiences for users.

Image, Video, and Audio Recognition

The ability of vector databases isn’t limited to text, but extends to multimodal data, like images, audio, and videos. Many databases now support a wide range of embedding models to convert different data types. For example, Pinecone supports NVIDIA’s llama-text-embed-v2 for text embedding and Jina AI’s jina-clip-v2 for multilingual & multimodal embedding. These integrated ML models convert raw input into numerical output, which in turn enables search across different data types, whether images, short clips, or speech.

Anomaly Detection

Vector databases store the embeddings of raw inputs like user behavior, network traffic patterns, temperature trends in IoT data, etc. When new data comes and becomes embeddings using neural networks, the databases will compare this new vector against the stored “normal” embeddings to identify anomalies. With this capability, these databases prove extremely helpful in anomaly detection across domains, like finance, cybersecurity, healthcare, and industrial IoT.
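One simple way to express this comparison is a distance threshold: a new embedding is flagged when even its nearest “normal” neighbor is far away. The vectors and the threshold value below are hypothetical, and real systems typically tune the threshold on historical data.

```python
import math

# Hypothetical embeddings of behavior known to be normal.
normal_embeddings = [[1.0, 1.0], [1.1, 0.9], [0.9, 1.2]]

def is_anomaly(vector, normal_vectors, threshold):
    """Flag the vector if its nearest normal neighbor is farther than the threshold."""
    nearest_distance = min(math.dist(vector, normal) for normal in normal_vectors)
    return nearest_distance > threshold

typical = is_anomaly([1.05, 1.0], normal_embeddings, threshold=0.5)
unusual = is_anomaly([5.0, 5.0], normal_embeddings, threshold=0.5)
```

Here `typical` is `False` and `unusual` is `True`. In a vector database, the nearest-neighbor lookup would be served by the index rather than by a full scan.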

Biometrics & Genomics

In biometrics and genomics, there are massive raw datasets, including fingerprints, facial images, voice samples, DNA sequences, and more. This unstructured data is challenging to process. But with AI advancements, those data points can be converted into feature vectors and stored in vector databases. Using ANN searches, the databases can search for the most relevant information to serve multiple use cases, like surveillance, authentication, drug development, or variant discovery.

Financial Services

Vector databases benefit financial institutions a lot. Entities like banks, fintech platforms, or trading firms often encounter large, complex data flows that include transaction histories, income patterns, news sentiment scores, market data, etc. Storing the vector embeddings of such data, the databases help AI assistants retrieve the truly necessary information to spot abnormal transactions, predict default risks, suggest investment products, and more.

E-commerce Personalization

Vector databases quickly align shoppers with the content and products most similar to their preferences. From product recommendations and personalized search results to dynamic content display, AI can extract the most relevant information through ANN searches inside the databases.

Getting Started With Vector Databases

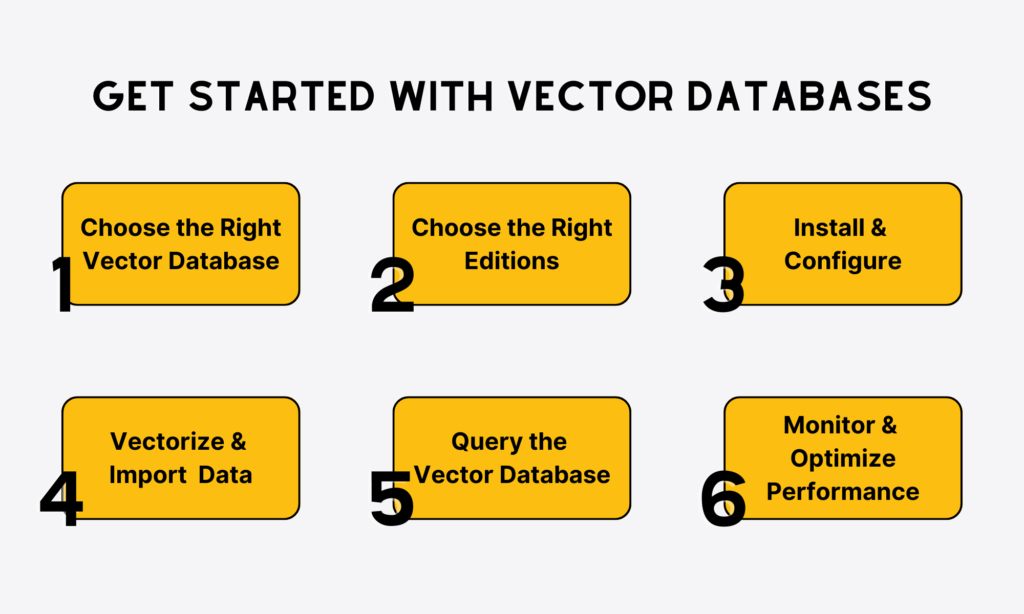

Do you want to integrate a vector database into your AI application to enhance its search function? Follow these steps to start working with a vector database effectively:

Step 1 – Choose the Right Vector Database

There are various vector databases available on the market. Each comes with its own strengths and best-fit use cases. To choose the right option, you must consider your requirements, from ultimate goals to budget. Then, compare vector databases across several factors, like ease of use, performance, scalability, compatibility with your current enterprise systems, and even technical support from their providers. This comparison helps you identify the most suitable database for your project.

Beyond these factors, we also advise you to choose open-source vector databases (e.g., ChromaDB) or those offering free plans/tiers (e.g., Pinecone). This helps your team test the above factors of your chosen database before going to the final commitment.

Step 2 – Choose the Right Vector Database Editions

Many vector databases provide different editions to serve specific requirements. Milvus is a typical example. It comes in four editions: Milvus Lite, Milvus Standalone, Milvus Distributed, and Zilliz Cloud.

- Milvus Lite is a lightweight version. You can install and operate it directly on your machine or embed it in Python applications. This edition offers core capabilities, such as diverse search methods (top-k, range, hybrid), indexing structures, data processing, and full CRUD (create, read, upsert/update & delete) operations. It’s suitable for quick prototyping and local deployments.

- Milvus Standalone has Milvus Lite’s core capabilities, but extends to scalability support, high-performance vector search, and multi-tenancy. As the name suggests, it operates separately as a single instance. This makes it ideal for situations where distributed or clustering setups are unnecessary and the data/query volume scale is small.

- Milvus Distributed allows your datasets and query volumes to be spread across various servers (“nodes”), beyond the inherent capabilities of Milvus Standalone. This edition works best for large-scale production.

- Zilliz Cloud is a managed version of Milvus. It handles all the complexities of database operations and frees up your development team to focus on your core applications.

Considering your project scope, data size, and budget, you can choose the right vector database edition.

Step 3 – Install and Configure the Chosen Vector Database

Now that you’ve chosen a vector database and the right edition, it’s time to install and configure it. Database providers now help you install and configure their products through easy-to-follow documentation, tutorials, and quickstart videos. The first step in database installation often involves using package managers like pip in your terminal window. You can customize the database setup (e.g., storage locations or indexing techniques) to align with your specific needs.

Step 4 – Vectorize and Import Your Data

Once you’ve installed the vector database, you need to feed it with vectors. You can vectorize the raw data using embedding models externally and load it to the database’s index. Otherwise, you can leverage the machine-learning models hosted within the database. Many common databases now integrate built-in embedding models to convert text-based and multimodal data automatically into numerical vectors. Opting for the integrated embedding models saves you much time and minimizes the complexities of the embedding process.
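The external route (embed first, then load) often sends data in batches rather than one record at a time. The sketch below assumes a hypothetical `embed` function that stands in for a real embedding model and uses a plain dictionary in place of the database; the batch size of 2 is arbitrary.

```python
def embed(text):
    """Hypothetical stand-in for an embedding model: returns a tiny 2-number vector."""
    return [float(len(text)), float(text.count(" ") + 1)]

def batched(items, size):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]

store = {}  # plays the role of the database: id -> vector

def upsert_batch(batch):
    """Embed one batch of (id, text) pairs and write the vectors to the store."""
    for doc_id, text in batch:
        store[doc_id] = embed(text)

documents = [("d1", "red running shoes"), ("d2", "blue jeans"), ("d3", "wool scarf")]
batch_sizes = []
for batch in batched(documents, size=2):
    upsert_batch(batch)
    batch_sizes.append(len(batch))
```

Batching keeps the number of round trips to the embedding model and the database low. Suitable batch sizes depend on the provider's limits, so check the documentation of the database you chose.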

Step 5 – Query Your Vector Database

When a user query arrives, it’ll be embedded in the same way as the raw documents. Upon receiving the query vector, the vector database will compare it against the stored embeddings and calculate similarity scores to find which content is most relevant to the given query. Some vector databases, like Pinecone, also allow you to add metadata filtering to narrow the search space or use reranking models to reorder the nearest neighbors before returning the refined results to an LLM for response generation.

Step 6 – Monitor and Optimize Performance

Over time, your vector database may perform worse due to growing data or query volumes. That’s why monitoring and optimizing the database is necessary to ensure optimized indexing and consistently high-speed search. Various vector databases (e.g., Pinecone or Milvus) come with built-in monitoring tools, such as dashboards or metrics to track their performance effectively.

When your database performs more slowly or uses too many resources, you should consider adjusting indexing parameters to balance speed, memory, and accuracy. Also, you should track query patterns and batch similar queries to reduce resource usage. If traffic keeps growing, consider adding more servers, using better memory options, or switching to more powerful hardware.
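If your database's built-in dashboards are not enough, query latency can also be tracked from the application side. The sketch below is one simple approach under stated assumptions: `timed` wraps any search function you pass in, the latency samples are hypothetical, and the percentile uses the nearest-rank method.

```python
import math
import time

def percentile(samples, p):
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))
    return ordered[rank - 1]

def timed(search_fn, query):
    """Run one search and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = search_fn(query)
    return result, (time.perf_counter() - start) * 1000

# Hypothetical latencies in milliseconds collected from ten queries.
latencies_ms = [10, 12, 11, 50, 13, 12, 11, 10, 14, 12]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
```

In this sample the median is 12 ms while the 95th percentile is 50 ms. A gap like that points to occasional slow queries that an average would hide, which is why tail percentiles are worth watching when you tune indexing parameters.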

Conclusion

This blog post has given you a detailed overview of what a vector database is, how it works, and its best use cases in this AI era. But note that vector databases don’t work independently. You only make full use of their powerful capabilities when combining them with the right components to create seamless, high-quality AI workflows. If you’re looking for a trusted, experienced partner in developing such an AI system, contact Designveloper!

We’re the leading software and AI development company in Vietnam, with 12 years of operations. At Designveloper, we have an excellent team of 100+ skilled specialists who excel at building custom, scalable AI solutions across industries using cutting-edge technologies. We’ve built medical assistants that measure health indicators to provide crucial “spot” and “trend” data to a nurse’s device. Further, we integrated LangChain, OpenAI, and other modern technologies to automate support ticket triage for customer service. We also developed an e-commerce chatbot that offers product information, recommends suitable products, and automatically sends emails to users.

Beyond our proven track record, we’ve received positive feedback from clients for our good communication, on-time delivery, and strong UX capabilities. If you want to transform your existing software with AI integration, Designveloper is eager to help!
