When people hear “GPT,” they often think of ChatGPT immediately, so it’s no wonder the two terms get mixed up. In fact, GPT is the technology behind ChatGPT and many other generative AI tools. You’ve likely read about it in the news and experimented with its conversational abilities. Since ChatGPT first launched in 2022, GPT-powered tools have sprung up like mushrooms and been rapidly integrated into our lives. But beneath GPT’s impressive benefits lies a fundamental question: What does GPT stand for, exactly?

If you’re curious about this technology and how it works to empower today’s GenAI tools, don’t miss this article! Let’s discover everything related to GPT with Designveloper, from its core parts and working mechanism to its potential future.

What Does GPT Stand For?

GPT is an acronym for Generative Pre-trained Transformer. It is a type of large language model (LLM) and a foundational framework for generative AI. At its core, it is a neural network that machines use to process natural language prompts. It combines a transformer deep learning architecture with pre-training on vast volumes of unlabeled data to create new, human-like content. To better understand this technology, let’s analyze each core part of its name: Generative, Pre-trained, and Transformer.

1. Generative

The name says it all. “Generative” here means a model’s ability to create text. Specifically, a generative model doesn’t simply repeat what it has seen. Instead, it learns the underlying patterns and relationships between words to capture the context behind them. Then, it applies that learned knowledge to generate novel text.

Imagine a developer who has studied programming theory and then uses that understanding to write original code for a completely new app. A generative model works in a similar way with text (and even other forms of data like images or videos).

This capability allows GPT-powered models like ChatGPT to create a broad range of text, including fictional stories, news articles, summaries, poems, conversational responses, and more.
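To make the idea of “learning patterns, then generating something new” more concrete, here is a minimal sketch in Python. It is not how GPT works internally: it assumes a tiny made-up corpus and a simple word-pair (bigram) model, and the function names `train_bigram_model` and `generate` are hypothetical. It only illustrates the principle of sampling a plausible next word again and again.

```python
import random
from collections import defaultdict

def train_bigram_model(corpus):
    """Record, for every word, the words that followed it in the text."""
    followers = defaultdict(list)
    words = corpus.split()
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

def generate(followers, start, max_words=8, seed=0):
    """Build a sequence by repeatedly sampling a plausible next word."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start]
    for _ in range(max_words - 1):
        candidates = followers.get(output[-1])
        if not candidates:  # no known continuation: stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)

# Tiny illustrative corpus (an assumption, not real training data).
corpus = "the cat sat on the mat . the dog sat on the rug . the cat saw the dog ."
model = train_bigram_model(corpus)
print(generate(model, "the"))
```

Depending on the seed, the output can be a word sequence that never appears in the corpus, even though every step follows a pattern the model has seen. GPT does the same kind of step-by-step generation, but with a far richer model of context.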

2. Pre-trained

The concept of “pre-training” refers to training the generative model on large data sets of text and code before it is assigned to perform specific tasks. It’s like a journalism student who needs a solid understanding of fundamentals (e.g., news collecting, fact checking, interviewing) before officially reporting on events and writing impactful stories.

During the pre-training phase, the model learns the foundational patterns, rules, and relationships within the data. This knowledge is not confined to common sense, grammar, and vocabulary, but also extends to contextual understanding.

During pre-training, the model isn’t explicitly told which specific task it will eventually do. This helps the model acquire underlying principles that can be broadly applied to various situations. As a result, it performs better when fine-tuned for a certain task (e.g., question answering or translation) with a smaller dataset. This is because it already has a strong foundation of linguistic knowledge, so it can perform that task well without requiring large amounts of task-specific data.
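One way to picture how a model can learn from unlabeled text is to see how plain text turns into training examples on its own. The sketch below assumes a simple whitespace split (real GPT models use subword tokens) and a hypothetical helper named `next_token_pairs`. Each example asks the model to predict the next word from the words before it, so no human labeling is needed.

```python
def next_token_pairs(text, context_size=3):
    """Turn raw, unlabeled text into (context, next word) training examples."""
    tokens = text.lower().split()  # simplification: real models use subword tokens
    pairs = []
    for i in range(1, len(tokens)):
        # Keep at most `context_size` preceding words as the input.
        context = tokens[max(0, i - context_size):i]
        pairs.append((context, tokens[i]))
    return pairs

for context, target in next_token_pairs("The cat sat on the mat"):
    print(context, "->", target)
```

A six-word sentence yields five such examples. Scaled up to huge volumes of text, this is the kind of signal that lets a model pick up grammar, vocabulary, and context before it is fine-tuned for any particular task.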

3. Transformer

A Transformer is a type of neural network architecture. It helps models like GPT overcome the limitations of previous dominant architectures like RNNs (Recurrent Neural Networks) in natural language processing.

Specifically, RNNs and other traditional sequential models handle text word by word, which makes it hard for them to capture relationships between distant words in a sequence. So, if a sentence contains important information at the beginning and the end, RNNs can struggle to connect them. The Transformer addresses this limitation with its core “attention mechanism.” This mechanism lets the model weigh how relevant each word in a sequence is to every other word, instead of only looking at each word separately. For this reason, the model can understand the contextual meaning of a sequence, even a long one.

Let’s take this sentence as an example:

The cat sat on the mat.

Traditional sequential models like RNNs process this sentence strictly word by word in the order: “The” → “cat” → “sat” → “on” → “the” → “mat.” As a result, they struggle to capture the connections between these words, especially in longer sentences.

The Transformer, on the other hand, calculates how, for example, the word “cat” is related to other words like “sat,” “on,” or “the.” This calculation helps the model recognize there’s a strong connection between “cat” and the two words: “sat” and “mat.” Accordingly, “sat” refers to the action of the subject (“cat”) while “mat” indicates a location of the subject. This allows the Transformer to understand the entire context of the sentence.
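The calculation described above can be sketched in a few lines of Python. This is a simplified illustration, not the full Transformer: the two-number word vectors are hand-picked assumptions (a trained model learns its own), each word’s vector is reused as both its query and its key, and the learned projection matrices and value vectors of real attention are left out. The function names are hypothetical.

```python
import math

def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

# Hand-picked 2-d vectors, purely illustrative.
words = ["The", "cat", "sat", "on", "the", "mat"]
vectors = {
    "The": [0.1, 0.0],
    "cat": [1.0, 1.0],
    "sat": [0.9, 0.8],
    "on": [0.0, 0.2],
    "the": [0.1, 0.0],
    "mat": [0.8, 1.0],
}

keys = [vectors[w] for w in words]
weights = attention_weights(vectors["cat"], keys)
for word, weight in zip(words, weights):
    print(f"cat -> {word}: {weight:.2f}")
```

In this toy setup, “sat” and “mat” receive clearly larger weights than “on” or “the,” which mirrors the idea that “cat” is most closely related to its action and its location. Note that every word is scored against every other word at once, rather than one after another.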

This capability makes the Transformer crucial in tasks that require an understanding of long-range relationships. These include producing accurate translations, understanding complex sentences, and creating consistent long-form text.

How Does GPT Work?

Combining all the core parts we explained above, the way GPT works is fairly straightforward. It uses a semi-supervised approach that combines unsupervised pre-training and supervised fine-tuning. This means GPT-powered models are first trained on massive volumes of unlabeled data and then fine-tuned on labeled data to perform specific tasks.

These models understand your natural language prompts thanks to the attention mechanism that allows GPT to calculate and identify the connections between words for contextual understanding. Instead of restating what the models have seen or learned, they create human-like content based on the prompts. This enables GPT models to significantly advance tasks related to natural language processing (NLP), like document generation or code creation.

The Evolution of GPT

So, how has GPT evolved? In fact, the concept of generative pre-training (GP) had long existed in machine learning applications. Even then, GP models used a semi-supervised learning approach: they were first trained to generate data points from unlabeled data sets and then trained to classify labeled data sets. However, they could not handle sequences with long-range dependencies well.

This problem wasn’t solved until the 2010s, when the attention mechanism was introduced. Google researchers then built this method into the transformer architecture, which they introduced in 2017. This laid the foundation for later large language models (LLMs), notably BERT in 2018. However, BERT was an encoder-only pre-trained transformer: its goal was to understand text rather than generate something new.

The term “GPT” entered official use when OpenAI published its research on this model in 2018. So if you ask, “Was the term GPT coined by OpenAI?” we’ll say yes. The company developed the first widely recognized and impactful GPT model capable of performing generative language tasks at scale. Since then, OpenAI has released a series of sequentially numbered GPT models. The most recent of these at the time of writing, GPT-4.1, outperforms its predecessors, especially in instruction following and coding. OpenAI also released GPT-4.5 as a research preview (“early stage”) for ChatGPT Pro users in February 2025.

5 Task-Specific GPT Tools

GPT lays a foundation for companies to build task-specific tools. Below are some typical examples:

1. ChatGPT

ChatGPT is an intelligent chatbot built on OpenAI’s GPT models. It uses natural language processing to engage in human-like conversations. With ChatGPT, you can perform a wide range of tasks, including:

- Answering questions on a variety of topics (e.g., scientific research, healthcare, or math solving)

- Generating novel content (e.g., blog posts, news articles, emails, reports)

- Summarizing long documents

- Brainstorming ideas

- Translating languages

- Writing and debugging code

- Learning new skills

Beyond supporting professional tasks, ChatGPT can even function as a companion that listens to your personal stories and motivates you to overcome problems. The success of GPT turned its downstream application, ChatGPT, into the fastest-growing AI assistant in the world, with an impressive 800% growth in just 30 months and a monthly user base of 800 million. ChatGPT’s in-app revenue has risen accordingly, from $731K (May 2023) to $108M (March 2025).

2. Salesforce’s Agentforce

Salesforce is a pioneer in integrating AI capabilities into CRM (Customer Relationship Management), initially with Einstein GPT, which used generative AI to empower sales, service, and marketing workflows. Building on this foundation, Salesforce went on to introduce Agentforce, which takes it a step further by creating autonomous AI agents in CRM. This AI tool allows your staff to automate complex, multi-step tasks across several areas:

- Sales AI: Write emails supported by customer data, summarize sales calls concisely, and derive actionable insights to support effective conversations. It even provides real-time predictions to guide sellers to close deals, build stronger relationships, and automate the sales process.

- Marketing AI: Offer insights to increase engagement, create highly tailored customer journeys, and automate custom outreach.

- Customer Service AI: Display relevant information in real-time during customer support interactions. This helps your agents solve issues faster, increasing their productivity and providing customers with more personalized experiences. Further, you can create AI agents to summarize case resolutions and create a knowledge base automatically.

- Commerce AI: Automatically create product descriptions, suggest relevant products, and offer seamless purchasing experiences.

3. BloombergGPT

BloombergGPT™ is a large language model developed by Bloomberg specifically for the financial industry. Trained on both financial and general-purpose data, it aims to help Bloomberg improve existing financial NLP tasks, such as:

- Answering finance-related questions

- Analyzing the sentiment in financial news and social media

- Identifying and classifying financial entities (e.g., companies, people)

- Categorizing financial news

- Translating human language queries into Bloomberg Query Language (Bloomberg’s proprietary language) to access and analyze financial data available in the Bloomberg Terminal

- Helping journalists create news headlines

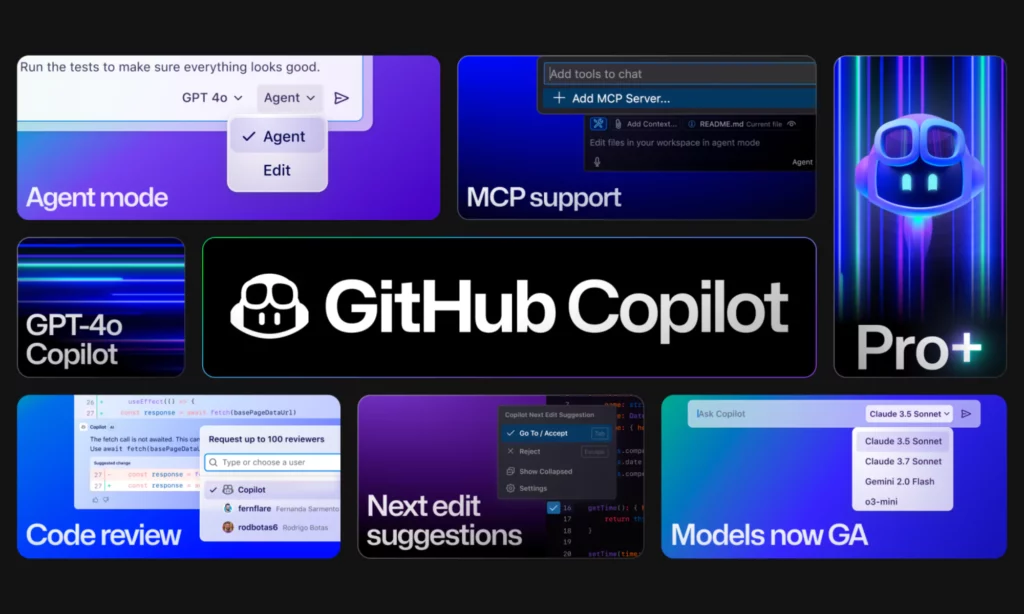

4. GitHub Copilot

GitHub Copilot is an AI pair programmer co-developed by GitHub and OpenAI. It offers a wide range of features to make coding quicker and more effective in various IDEs (Integrated Development Environments) like Visual Studio Code, JetBrains, or Neovim. It uses natural language processing to complete a variety of tasks, including:

- Code Completion: Automatically recommend lines of code in supported IDEs. If you use VS Code, it even predicts the next edit you’re likely to make and suggests a completion for it.

- Copilot Chat: Answer coding-related questions.

- Coding Agent: Make required changes for a GitHub issue you assigned previously and create a pull request for you to review. Copilot can use AI capabilities to summarize the changes for reviewers to understand the context and impact of these changes quickly. It can even automatically complete your descriptions of pull requests.

- Testing and Debugging: Create unit tests and recommend fixes or corrections within your code.

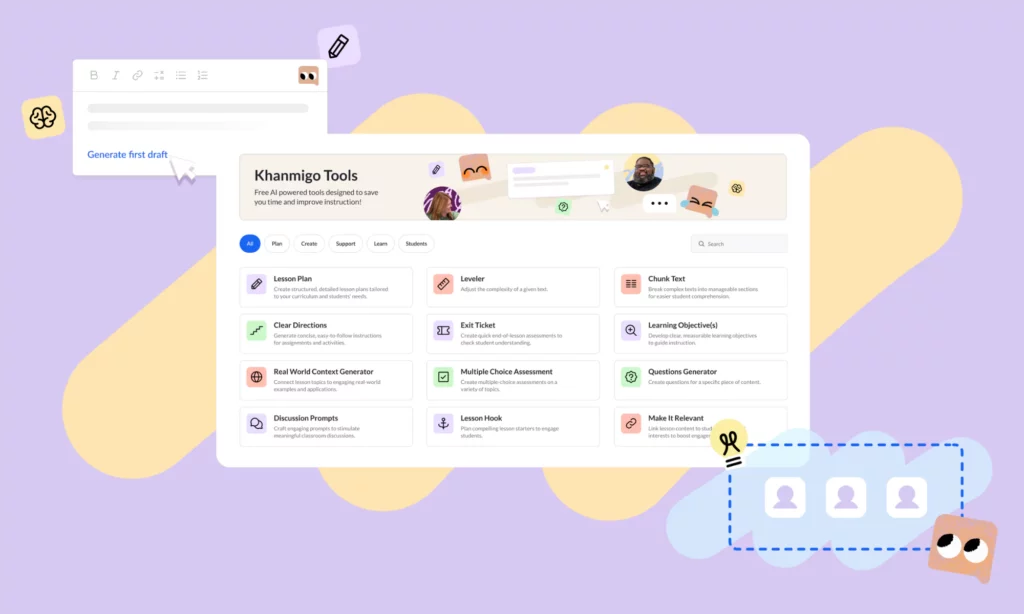

5. Khanmigo

Khanmigo is an AI-powered teaching and tutoring assistant developed by Khan Academy, a trusted education nonprofit.

For Learners and Families: Khanmigo serves as a patient tutor. It guides students to find answers themselves instead of simply handing over solutions as many other AI tools do. The tool integrates with Khan Academy’s content library to cover a variety of subjects, like maths, coding, social studies, and humanities, regardless of a learner’s educational level. It also offers feedback on essays and code to encourage progress.

For Teachers: Khanmigo helps teachers work more seamlessly and quickly. Teachers can use it to create learning objectives, lesson hooks, and even full lesson plans. It can automatically adjust text complexity and connect lessons with students’ lives. It also supports building quizzes for student assessment, drafting class newsletters, and more.

Will GPT Continue to Thrive in the Future?

As we’ve seen, GPT models are constantly being refined, with improvements in multimodality (processing images, audio, and video beyond text), contextual understanding, and accuracy. According to ChatGPT: H1 2025 Strategy, an internal OpenAI strategy document, the company envisions ChatGPT as a super-assistant that can understand your concerns and help you do tasks with emotional intelligence.

We also foresee GPT’s wider adoption across industries, with its capabilities adapted to power task-specific tools for customer service, education, financial analysis, and more. As the demand for integrating GPT into workflows increases, the technology will advance to meet this need. Meanwhile, giants like Microsoft, Meta, and Google are entering the AI landscape with their own GPT-like models. This competition will push GPT to keep advancing in order to stay ahead.

Final Words

This article gave you the fundamentals to answer the big question: What does GPT stand for? Short for Generative Pre-trained Transformer, GPT is a powerful technology that enables large language models to create novel content for a range of tasks (e.g., translation or article writing). In the future, this technology will likely continue to thrive and move closer to becoming a super-assistant in our lives. What do you think about GPT and its impressive growth? Share your ideas with us on Facebook, X, and LinkedIn!
