Voice technology is changing how we communicate with devices and services. It’s no wonder, then, that the global voice recognition market is predicted to grow substantially as the technology becomes ever more embedded in our day-to-day lives. It lets users operate devices hands-free and speak to them more naturally.

Take voice assistants like Siri, Google Assistant and Amazon Alexa, now common in homes and offices. They can do many things, from setting reminders to controlling home devices. A National Public Media report found that 62 percent of Americans aged 18 and older use a voice assistant on some device, including smart speakers, smartphones and car systems.

Voice recognition technology is based on natural language processing (NLP), machine learning, and other complex processes that are hard to understand and implement. These systems are trained to understand human speech and translate spoken commands into actionable terms. According to a Statista study, the use of voice tools in media companies cuts costs and increases productivity.
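To make the idea of turning speech into actionable terms more concrete, here is a minimal sketch in Python. It assumes the speech has already been transcribed to text and covers only the final interpretation step. The rule table, the intent names and the `parse_command` function are hypothetical and purely illustrative; real assistants rely on trained NLP models rather than hand-written patterns.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    intent: str  # what the user wants done
    slot: str    # the object or value the intent applies to


# Hypothetical rule table: regex pattern -> intent name.
# Real assistants learn this mapping with machine learning models.
RULES = [
    (re.compile(r"^remind me to (?P<slot>.+)$"), "set_reminder"),
    (re.compile(r"^turn on (?:the )?(?P<slot>.+)$"), "device_on"),
    (re.compile(r"^turn off (?:the )?(?P<slot>.+)$"), "device_off"),
    (re.compile(r"^play (?P<slot>.+)$"), "play_music"),
]


def parse_command(transcript: str) -> Optional[Command]:
    """Map an already-transcribed utterance to a structured command."""
    # Normalise case and drop trailing punctuation before matching.
    text = transcript.lower().strip().rstrip(".!?")
    for pattern, intent in RULES:
        match = pattern.match(text)
        if match:
            return Command(intent=intent, slot=match.group("slot"))
    return None  # utterance not understood


if __name__ == "__main__":
    print(parse_command("Turn on the kitchen lights"))
    print(parse_command("Remind me to call the dentist."))
```

An utterance that matches no rule returns `None`, which is roughly the point where an assistant would reply that it did not understand.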

Voice technology is not limited to personal use; it is finding a growing place in professional environments. In customer service, voice recognition software is used to receive customer inquiries, while in healthcare it is used to document patient information. Its versatility and convenience make it a powerful force for improving the user experience across multiple industries.

What is Voice Technology?

Voice technology is any system or tool that gives computers and digital devices the ability to process and respond to human voice commands. Users can interact with a device in natural language and get things done hands-free.

In 2022, the global voice recognition technology market was estimated at well above 12 billion USD, and it is anticipated to reach around 50 billion USD by 2029. Furthermore, 41 percent of US adults use voice search daily.

According to the statistics, around 8.4 billion voice assistants will be in use by 2024. National Public Media also reported that 57 percent of voice command users use voice commands daily.

Popular voice assistants include Apple’s Siri, Google Assistant, Amazon’s Alexa and Microsoft’s Cortana. With these assistants, users can schedule reminders, get answers to questions and control smart home devices.

Voice technology is making our interactions with devices more intuitive and accessible to everyone. As the technology matures, it opens the door to even more innovative applications and improvements in user experience.

The 5 Things You Need to Know about Voice Technology

Voice technology is rapidly changing how consumers engage with devices and services. Here are five key things you need to know:

- Voice technology is booming: The global voice recognition market was worth over 12 billion USD in 2022 and is forecast to reach 50 billion USD by 2029.

- Voice assistants are everywhere: More than 41% of US adults use voice search daily, and more than 8.4 billion voice assistants are expected to be in use globally by 2024.

- Smart speakers are leading the charge: More than 30,000 home devices can be controlled with Google Assistant, and smart speakers are being used for a growing range of purposes.

- Voice technology is improving productivity: Voice technology lowers costs and increases productivity in industries including media.

- Privacy and security remain concerns: As voice technology becomes ubiquitous, concerns about security and privacy persist.

1. Voice technology is booming

Voice technology is exploding. In 2022, the worldwide voice recognition market generated about 12 billion U.S. dollars, and it is expected to reach about 50 billion U.S. dollars by 2029. This growth is fueled by advances in machine learning and artificial intelligence (AI) and supported by a rapidly growing number of connected devices.

Voice assistants are on the rise. Research shows that in the U.S. alone, more than 41 percent of adults use voice search every day, and the total number of voice assistants worldwide is expected to reach 8.4 billion or more by 2024. Voice assistants like Google Assistant and Apple’s Siri, which support over 30,000 home devices, are popular. Siri accounts for about 45 percent of the market.

Voice technology is used across different industries. Take voice command users, for instance: 57% use voice commands daily, and 53% of smart speaker owners respond to ads appearing on their devices. Voice technology is also used in subtitling and closed captioning, where it reduces costs and raises productivity.

Healthcare is also using voice technology. Doctors and clinicians can now turn their speech into detailed clinical descriptions that are stored in the Electronic Health Record (EHR) system. Integrating voice tools with the EHR has increased efficiency and accuracy in medical coding and documentation.

2. Voice assistants are everywhere

Voice technology is now something that people use every day. By 2024, there will be more than 8.4 billion voice assistants in use around the world, more than the world’s population. This rapid growth is driven by the spread of voice assistants in smartphones, smart speakers, cars and beyond.

They are no longer a novelty but essential tools for many people. For example, 72 percent of people with a voice-activated speaker use it on a daily basis. These devices make hands-free tasks convenient, such as setting reminders, playing music and controlling smart home devices.

The statistics reflect how heavily we use voice assistants: 41% of US adults use voice search daily, and 57% of voice command users use voice commands on a daily basis. Such wide adoption is a good indicator of how much voice technology has become part of our daily lives.

Voice assistants are also becoming more advanced, with natural language processing (NLP) and machine learning making it easier for them to understand and respond to user queries. Companies such as Amazon, Google and Apple are leading innovation in this field, continually improving their voice assistant technologies to enhance the user experience.

In short, voice assistants have become indispensable parts of our lives, offering a convenient and effective way to get things done. As the technology evolves, voice assistants will become more sophisticated and more deeply integrated into our daily routines.

3. Smart speakers are leading the charge

Voice technology has made the modern smart speaker remarkably capable. Recent figures show that 62% of Americans aged 18 and above use a voice assistant on some device, including smart speakers. This gives an indication of how widely these devices are used for all kinds of tasks.

Smart speakers like Amazon Echo and Google Home have become the heart of smart homes. These devices do all sorts of things, such as playing music, reporting the weather and controlling other smart devices in your home. The global smart speaker market is expected to grow at a CAGR of 19.3 percent over the 2024-2032 forecast period, from an estimated USD 15 billion in 2024 to USD 61.4 billion in 2032.
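As a quick sanity check on the forecast above, the compound annual growth rate (CAGR) arithmetic can be sketched in a few lines of Python. The function names are illustrative assumptions, and the only inputs are the figures quoted in the text (USD 15 billion in 2024, 19.3 percent, eight years to 2032).

```python
def project_value(start_value: float, cagr: float, years: int) -> float:
    """Value after compounding `cagr` (as a fraction) for `years` years."""
    return start_value * (1 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Annual growth rate that turns start_value into end_value over `years`."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    years = 2032 - 2024  # eight compounding periods

    # Project the 2024 estimate forward at the quoted growth rate.
    projected = project_value(15.0, 0.193, years)
    print(f"Projected 2032 market size: USD {projected:.1f} billion")

    # Work backwards from the quoted 2032 figure to the implied rate.
    rate = implied_cagr(15.0, 61.4, years)
    print(f"Implied CAGR: {rate:.1%}")
```

Both directions agree with the quoted numbers to within rounding, which suggests the forecast figures are internally consistent.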

Integration with other smart devices is one of the main reasons for the popularity of smart speakers. Using voice commands, users can control their smart lights, thermostats and security systems. This convenience and ease of use have made smart speakers a favorite with consumers.

Smart speakers aren’t just for home use. They are also being used in industries such as healthcare and banking to improve efficiency and offer better services. For example, voice technology is being deployed in healthcare to support patient care and in banking to support customers.

In short, smart speakers are at the leading edge of the voice technology revolution, offering convenience, integration and a vast range of possible applications. As the technology matures, we’ll see even more innovative implementations and greater adoption.

4. Voice technology is improving productivity

Voice technology is transforming workplaces by boosting efficiency and productivity. A recent report reveals that 67% of mobile workers using Voice over Internet Protocol (VoIP) systems report an increase in productivity. This technology allows employees to handle tasks hands-free, making multitasking easier and faster.

Moreover, businesses adopting VoIP systems have seen cost savings of between 30% and 50% on communication expenses. This is particularly beneficial for small businesses looking to optimize their budgets. The integration of voice technology in customer service has also reduced response times and improved customer satisfaction rates.

In the healthcare sector, voice technology is streamlining administrative tasks, allowing medical professionals to focus more on patient care. For instance, voice-enabled transcription services are being used to quickly document patient information, reducing paperwork and minimizing errors.

Overall, voice technology is proving to be a game-changer in various industries, enhancing productivity and operational efficiency.

5. Privacy and security remain concerns

While advances in voice technology continue, privacy and security issues remain. A World Economic Forum report from 2023 shows that 25 percent of Americans don’t plan to use voice technology because they don’t trust it. This lack of trust stems from concerns about data protection and transparency. For example, voice assistants such as Amazon Alexa and Google Home collect massive amounts of data, including background noise and voice prints.

Additionally, voice biometrics, which can be used to personalize the experience, also present significant privacy risks, according to a 2021 study conducted by the National Institute of Standards and Technology (NIST). The study emphasizes the need for strong security mechanisms to protect such sensitive information. In addition, a Statista survey from 2020 shows that people’s sense of safety has shifted only slightly over time, and that worries about privacy and hacking remain.

Companies must address these concerns by prioritising data protection and transparency. Regulations such as the General Data Protection Regulation (GDPR) in the EU, along with rules in other regions, carry strict penalties. Building user trust and encouraging wider adoption of voice technology depends on compliance with these regulations.

To conclude, voice technology is both beneficial and useful, but security and privacy issues need to be addressed for the industry to keep growing beyond the markets where it is already established.

Top 5 Voice Assistants Right Now

Voice technology has rapidly evolved, and today’s voice assistants are more advanced than ever. Below are the top 5 most capable voice assistants right now.

1. Google Assistant

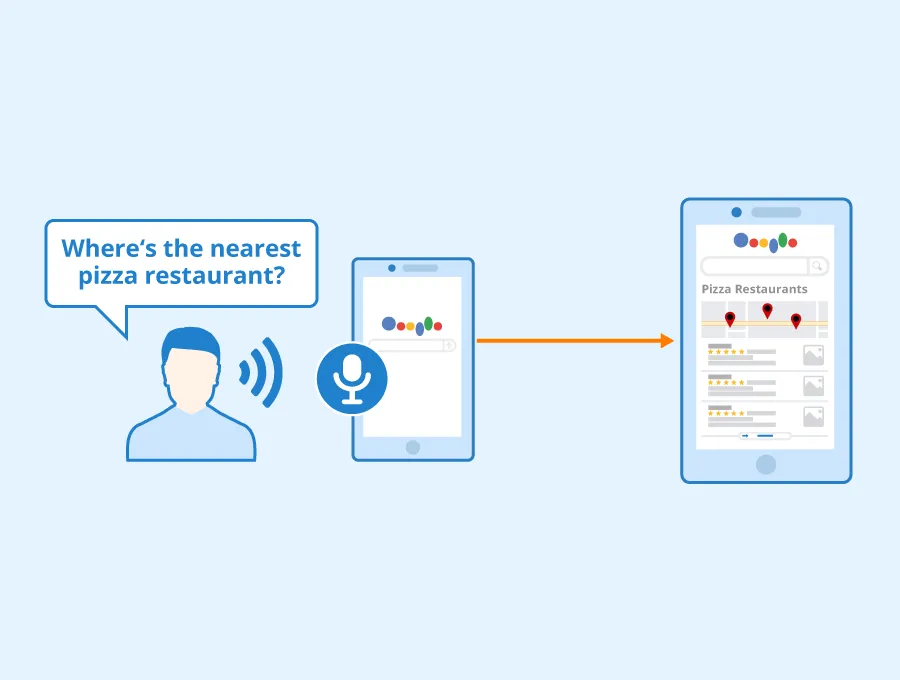

Google Assistant is a leading voice assistant that has gained significant popularity. It is supported on a range of devices, including smartphones, smart speakers, and smart displays. With Google Assistant you can do all kinds of things, from asking questions and setting reminders to controlling your smart home and getting real-time translations.

Think with Google reports that roughly 70 percent of requests to Google Assistant are expressed in natural language, which makes it intuitive and helpful. The number of active users has reportedly quadrupled over the past year.

Whether setting alarms, checking the weather, or finding a nearby restaurant, Google Assistant helps users with daily tasks. It can also control devices such as lights, thermostats, and security cameras in a smart home, which makes home automation more versatile.

As Google Assistant continues to grow, it gains new features and connections that improve the way users interact with their devices and manage their daily routines. With its widespread adoption and continuous improvement, it is one of the best voice assistants you can find.

2. Amazon Alexa

Amazon Alexa is a leading voice assistant in the world of voice technology. As of 2024, approximately 68.2% of smart speaker customers in the United States use Alexa. This cloud-based assistant can respond to commands and requests across devices such as Amazon Echo, Amazon Smart Thermostat, and Wyze Cam.

Alexa provides a wide variety of functionality, including answering questions, setting alarms, playing music, and controlling smart home devices. Alexa now supports 100,000 smart home devices, a big jump from the 60,000 it supported in 2019. This shows how much Alexa’s smart home ecosystem has grown.

Where Alexa shines, however, is in the number of third-party devices and services it can integrate with. For example, Alexa lets users control voice-activated lights, smart TVs, and even smart door locks. This versatility makes Alexa a great option for anyone who wants hands-free control of their smart home.

As for user demographics, Alexa is predominantly used by people aged 35 and older, while younger generations use it less often. This trend could indicate that middle-aged and older adults find Alexa’s functions attractive for daily tasks and information retrieval.

3. Apple Siri

If you’ve ever wondered about the state of the art in voice assistants, you’ll want to check out Apple Siri. Siri has reportedly reached around 660 million users. It now supports 17 languages and is a bit more contextually aware thanks to Apple Intelligence in iOS 18.1.

The latest update includes the App Intents system, which lets developers make Siri smarter by allowing it to work with onscreen content. This means users can ask Siri questions about web pages, documents and even photos. For instance, you can ask Siri to summarize a document or tell you something about a photo.

Siri’s new design brings a glowing animation, and it can now keep listening to continue the conversation. This makes interactions more intuitive and easier to follow. Siri’s voice has also been made more natural and less robotic, which improves the overall user experience.

One thing the improvements haven’t changed is that Siri overall still needs a lot of work. iOS 18.2 will add integration with ChatGPT, expanding Siri’s abilities even more. Siri is reportedly faster, but it still struggles with more detailed questions.

4. Microsoft Cortana

Microsoft Cortana is a powerful voice assistant that helps users stay organized and productive. It’s part of Microsoft’s lineup and has 148 million monthly active users. Its features include briefing emails with actionable reminders and insights that help users prepare for meetings and stay on top of their schedules.

Cortana is built into Microsoft To Do and Power BI, so users can keep their tasks in order and see data insights without breaking stride. For instance, users can ask Cortana to find sales figures or an appointment with a colleague. Voice technology makes it easier for businesses to cut through routine work and be more productive.

While small compared to larger voice assistants like Siri and Google Assistant, Cortana has achieved some impressive things despite slow user base growth of only 2% over the last 10 months. Still, Microsoft keeps innovating, having launched the Cortana-powered Harman Kardon Invoke smart speaker not too long ago. The device is designed to accelerate Cortana’s momentum and increase its usage.

5. Samsung Bixby

Samsung Bixby has evolved since its launch into a versatile voice assistant. It is used on Samsung devices such as smartphones, tablets and smartwatches, and extends to home appliances. Bixby’s features help users get more out of their devices and give them more control.

One of Bixby’s strengths is its language recognition. Recent updates to its natural language processing make it better able to understand language as the speaker intended. This lets users perform tasks such as setting reminders, sending texts, or controlling smart home devices more quickly.

Bixby’s customisation options let users tailor the assistant to their needs. With Bixby Custom Voice Creator, users can create custom voice commands, change the wake-up phrase and even generate a personalized voice. It’s a helpful feature for people who want tailor-made interaction with their devices.

Bixby integrates so well with the Samsung ecosystem that it feels at home across devices. For example, Bixby can prompt users to begin a workout in Samsung Health, then play music that complements the exercise. This integration improves the overall user experience and makes it simpler to manage daily tasks.

Bixby also works offline, so users can run key commands without an internet connection, such as setting a timer, taking a screenshot or turning on the flashlight. This keeps Bixby’s core functions available even when users are not connected.

Samsung continues to improve the assistant, and plans include introducing generative AI to expand Bixby, a spokesperson said. This could allow Bixby to create art, music, and other content at the user’s request, making it even more versatile and useful.

Conclusion

So, what is voice technology? In short, it’s not just a trend; it’s a force of transformative change affecting entire industries. The applications are many and varied, from healthcare to banking. As experts in web and software development, Designveloper keeps up to date with this technology and offers advanced voice solutions. Through our VoIP application development service, we’ve shown a strong commitment to improving the user experience.

Check out the work we’ve been doing and learn what Voice Technology services we offer that can help you unlock the power of voice technology.
