vLLM is an open-source library, originally developed at UC Berkeley, for efficient LLM inference and serving on GPUs. It offers multiple features to deliver high throughput and low latency for enterprise-grade, production-ready applications.

At its core is PagedAttention, an attention algorithm that manages memory for the attention key-value (KV) cache efficiently. It splits the KV cache into small fixed-size blocks, much like an operating system’s virtual memory pages. Blocks are allocated on demand as a sequence grows, freed when it finishes, and can be shared across sequences (for example, requests with a common prompt prefix), which keeps memory waste low.
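The bookkeeping behind this idea can be sketched in a few lines of Python. This is an illustrative toy, not vLLM’s implementation: `BlockAllocator`, `Sequence`, and the 16-token `BLOCK_SIZE` are hypothetical names and values, and the sketch tracks only block IDs, not the key/value tensors themselves. Each sequence keeps a block table that maps its logical token positions to physical blocks, and reference counts let several sequences share one block.

```python
# Illustrative sketch of paged KV-cache bookkeeping (hypothetical, not vLLM's code).
BLOCK_SIZE = 16  # tokens per block; an illustrative value


class BlockAllocator:
    """Hands out fixed-size physical blocks from a shared pool, with reference counts."""

    def __init__(self, num_blocks: int) -> None:
        self.free_blocks = list(range(num_blocks))
        self.ref_counts = [0] * num_blocks

    def allocate(self) -> int:
        if not self.free_blocks:
            raise MemoryError("no free KV-cache blocks")
        block = self.free_blocks.pop()
        self.ref_counts[block] = 1
        return block

    def share(self, block: int) -> int:
        # Another sequence reuses this block (e.g., a common prompt prefix).
        self.ref_counts[block] += 1
        return block

    def release(self, block: int) -> None:
        self.ref_counts[block] -= 1
        if self.ref_counts[block] == 0:
            self.free_blocks.append(block)


class Sequence:
    """Maps one sequence's logical token positions onto physical blocks."""

    def __init__(self, allocator: BlockAllocator) -> None:
        self.allocator = allocator
        self.block_table: list[int] = []  # logical block index -> physical block id
        self.num_tokens = 0

    def append_token(self) -> None:
        # Grab a new block only when the current one is full.
        if self.num_tokens % BLOCK_SIZE == 0:
            self.block_table.append(self.allocator.allocate())
        self.num_tokens += 1

    def physical_slot(self, position: int) -> tuple[int, int]:
        # Logical position -> (physical block id, offset inside that block).
        return self.block_table[position // BLOCK_SIZE], position % BLOCK_SIZE

    def free(self) -> None:
        for block in self.block_table:
            self.allocator.release(block)
        self.block_table.clear()
        self.num_tokens = 0


allocator = BlockAllocator(num_blocks=8)
seq = Sequence(allocator)
for _ in range(20):  # 20 tokens fit in two 16-token blocks
    seq.append_token()
print(seq.block_table, seq.physical_slot(17))
```

Because a new block is claimed only when the previous one fills up, a sequence wastes at most one partially filled block instead of a whole preallocated context window.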

Beyond PagedAttention, vLLM also comes with:

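- continuous batching for fast token generation. Instead of waiting for a whole batch to finish, vLLM adds new requests to the running batch as soon as others complete. Anyscale’s benchmarks reported up to 23x higher throughput than naive static batching, along with lower p50 latency.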

- distributed inference (tensor and pipeline parallelism) to spread models that are too large for one GPU across several GPUs or nodes;

- optimized support for high-end GPUs to run model inference faster and handle many concurrent requests efficiently;

- and more!
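To see why continuous batching helps, here is a toy simulation that counts decode steps for a mix of long and short requests. It is a simplified model, not vLLM’s scheduler: `static_batching_steps` and `continuous_batching_steps` are hypothetical helpers, every request is assumed to produce one token per step, and prefill cost and memory limits are ignored. It does not reproduce the 23x figure above; it only shows the mechanism.

```python
from collections import deque


def static_batching_steps(lengths: list[int], batch_size: int) -> int:
    """Each batch runs until its longest request finishes; freed slots sit idle."""
    steps = 0
    for i in range(0, len(lengths), batch_size):
        steps += max(lengths[i:i + batch_size])
    return steps


def continuous_batching_steps(lengths: list[int], batch_size: int) -> int:
    """Finished requests leave after every step, and waiting ones take their slots."""
    waiting = deque(lengths)
    running: list[int] = []  # tokens still to generate for each in-flight request
    steps = 0
    while waiting or running:
        # Admit waiting requests into any free slots before the next decode step.
        while waiting and len(running) < batch_size:
            running.append(waiting.popleft())
        # One decode step emits one token per running request; finished ones drop out.
        running = [remaining - 1 for remaining in running if remaining > 1]
        steps += 1
    return steps


lengths = [100, 5, 5, 5, 100, 5, 5, 5]  # tokens each request will generate
print(static_batching_steps(lengths, batch_size=4))
print(continuous_batching_steps(lengths, batch_size=4))
```

In this toy workload, static batching needs 200 steps because each batch waits for its longest request, while continuous batching finishes in 105 steps by refilling slots as soon as the short requests complete.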

These features make vLLM well suited for production-ready, enterprise-grade applications rather than just research experiments. If you need consistent performance at scale and low latency on very large models, vLLM is a strong option.

However, beyond vLLM, there is a variety of alternatives to choose from for seamless LLM inference and serving. Each comes with specific strengths and best use cases.

In this blog post, we offer you a curated list of the best vLLM alternatives and detail how to choose the right one. Let’s get started!

Why Consider Alternatives to vLLM?

vLLM is an excellent option, but not the perfect fit for all cases. Here are some of vLLM’s limitations:

- High hardware requirements: vLLM is designed primarily for powerful GPUs with plenty of VRAM. It shines when you serve very large models or a growing number of concurrent queries, workloads that require expensive hardware anyway. However, if CPUs alone are enough for your application, vLLM is likely overkill.

- Memory and context window trade-offs: By default, vLLM pre-allocates a large share of GPU memory (90%, set by `gpu_memory_utilization`) for the model weights and KV cache. It also requires that KV cache to hold at least one sequence at the model’s full context length (`max_model_len`, which defaults to the model’s maximum). Let’s say the model supports 10K tokens, but your prompts are only 1K tokens. Unless you lower `max_model_len`, the framework still plans for 10K-token sequences. This default reservation can waste memory and lead to an out-of-memory (OOM) error when other processes also need VRAM.

- Complex setup: vLLM is generally easy to install and deploy on a single GPU. Distributed inference across multiple GPUs or nodes, however, requires more technical know-how and setup, which can mean a steep learning curve for teams less experienced with GPU deployments or ML infrastructure.
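The size of the memory reservation described above follows from simple arithmetic: the KV cache stores one key and one value vector per layer, per KV head, per token. The sketch below estimates it for a hypothetical 7B-class configuration (32 layers, 32 KV heads of dimension 128, FP16 values); `kv_cache_bytes` is an illustrative helper and the numbers are estimates, not measured vLLM usage. In vLLM itself, `max_model_len` and `gpu_memory_utilization` are the usual knobs for trimming this footprint.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   num_tokens: int, bytes_per_value: int = 2) -> int:
    """Approximate KV-cache size: a key and a value vector per layer, KV head, and token."""
    return 2 * num_layers * num_kv_heads * head_dim * num_tokens * bytes_per_value


GIB = 1024 ** 3

# Hypothetical 7B-class config: 32 layers, 32 KV heads of size 128, FP16 (2-byte) values.
config = dict(num_layers=32, num_kv_heads=32, head_dim=128)

for tokens in (1_000, 10_000):
    size = kv_cache_bytes(num_tokens=tokens, **config)
    print(f"{tokens:>6} tokens -> {size / GIB:.2f} GiB per sequence")
```

For this configuration, each token costs 0.5 MiB of KV cache, so one 10K-token sequence needs ten times the memory of a 1K-token one (about 4.88 GiB versus 0.49 GiB).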

Given these pain points, vLLM is not suitable for every situation, especially when you’re only running a few queries or prototyping quickly on CPU-only setups. That’s why it’s worth considering vLLM alternatives for use cases where vLLM is unnecessary or even wasteful.

Top 12 Popular vLLM Alternatives for LLM Inference

If you’re curious about the best vLLM alternatives for LLM inference, don’t miss this section. Here, we detail 12 popular frameworks and tools similar to vLLM, along with their key features and best use cases:

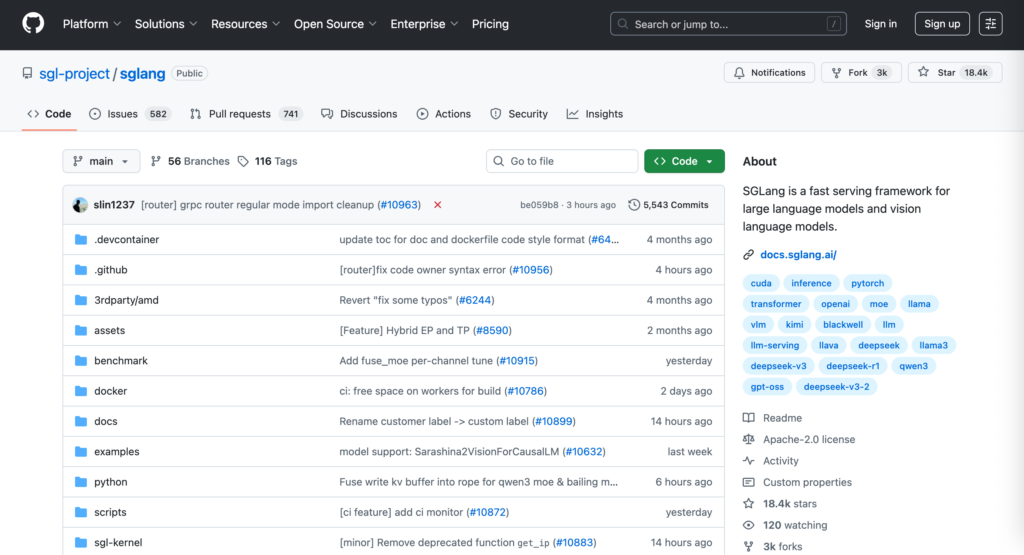

1. SGLang

SGLang is a fast framework for serving LLMs and vision language models (VLMs). It delivers low-latency responses and lets you control a model’s behavior at inference time. By adjusting generation settings, resource allocation, and other parameters, you get tighter control over SGLang’s performance and output.

Key Features

- Fast Backend Runtime: SGLang comes with multiple features to serve models efficiently, including RadixAttention for prefix caching, prefill-decode disaggregation, a zero-overhead CPU scheduler, tensor/pipeline/data/expert parallelism, continuous batching, speculative decoding, and more.

- Flexible Frontend Language: SGLang provides an intuitive interface for programming LLM applications. It lets you specify what the language model should do through features like chained generation calls, multimodal inputs, advanced prompting, parallelism, control flow, and external interactions.

- Multi-Model Support: SGLang integrates seamlessly with embedding models (E5, GTE-Qwen2, BGE, CLIP, etc.), generative models (Llama, GPT, Qwen, etc.), rerankers (BGE-Reranker), and reward models (Skywork). Further, it offers extensibility to integrate new models easily.

- Flexible Hardware Options: SGLang can run locally or on cloud servers. For local deployments, you can install and run the framework on different hardware platforms, including AMD GPUs, Blackwell GPUs, CPU servers, TPU, NVIDIA Jetson Orin, and Ascend NPUs.

- Offline Capability: SGLang offers the Offline Engine API to run LLM and VLM inference directly without the need for additional HTTP servers, which can add unnecessary complexities or overhead.
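SGLang’s RadixAttention keeps KV-cache entries in a radix tree keyed by token sequences, so requests that share a prefix (such as a common system prompt) can skip recomputing it. The sketch below illustrates only the lookup idea and is not SGLang code: `PrefixCache` is a hypothetical class, it uses a plain uncompressed trie instead of a true radix tree, and it records which prefixes exist without storing KV tensors or handling eviction.

```python
class PrefixCache:
    """Toy token-level trie recording which prompt prefixes already have cached KV entries."""

    def __init__(self) -> None:
        self.root: dict = {}

    def insert(self, tokens: list[int]) -> None:
        node = self.root
        for token in tokens:
            node = node.setdefault(token, {})

    def longest_cached_prefix(self, tokens: list[int]) -> int:
        """Count leading tokens whose KV cache could be reused instead of recomputed."""
        node, matched = self.root, 0
        for token in tokens:
            if token not in node:
                break
            node = node[token]
            matched += 1
        return matched


cache = PrefixCache()
system_prompt = [1, 42, 7, 99]        # hypothetical token IDs shared by many requests
cache.insert(system_prompt + [5, 6])  # first request: computed in full, then cached

new_request = system_prompt + [8, 9, 10]
reused = cache.longest_cached_prefix(new_request)
print(f"reuse {reused} tokens, prefill only {len(new_request) - reused}")
```

Here the second request reuses the four system-prompt tokens and only needs to prefill its three new tokens.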

Best Use Cases

SGLang works best:

- For situations requiring high throughput and low latency. Some real-world applications of SGLang include real-time translation, interactive conversational AI, and GenAI backends.

- If you want to handle multimodal tasks, process complex prompts effectively, and create synthetic data for model training or testing.

Pricing Models

Open-source under Apache 2.0.

2. TensorRT-LLM

TensorRT-LLM is an open-source NVIDIA library that optimizes and speeds up the inference performance of LLMs on NVIDIA GPUs. The library is built to be modular and easy to adjust. Whether you want to experiment with LLMs, optimize existing LLM deployments, or build advanced AI applications, TensorRT-LLM has all the tools and functionalities to achieve your goals with generative AI.

Key Features

- PyTorch-Native Architecture: TensorRT-LLM is architected on PyTorch and allows you to experiment with the runtime or extend functionality.

- Python LLM API: The library offers a high-level Python LLM API that supports different inference setups, from a single GPU to multi-GPU servers and multi-node systems. This API also works seamlessly with the wider inference ecosystem, including NVIDIA Dynamo and the Triton Inference Server.

- Advanced Optimization & Production Features: TensorRT-LLM includes various built-in capabilities to help LLMs perform inference efficiently on NVIDIA GPUs. These features cover in-flight batching, speculative decoding, KV cache management, advanced quantization (optimized FP4 and FP8 kernels), chunked prefill, and more.

- Comprehensive Model Support: TensorRT-LLM integrates seamlessly with popular language and multimodal models, including gpt-oss, DeepSeek-R1/V3, Llama 3.2, and more.

- Latest GPU Architecture Support: The library supports a wide range of recent NVIDIA GPU architectures, including Blackwell, Hopper, Ada Lovelace, and Ampere.
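Speculative decoding, listed above for TensorRT-LLM (and also offered by SGLang), lets a small draft model propose several tokens that the large target model then verifies. Below is a toy sketch of the greedy variant; `speculative_step` and the two stand-in "models" are hypothetical, and production engines verify all drafted positions in one batched forward pass and, when sampling, use a probabilistic acceptance rule rather than exact matching.

```python
from typing import Callable

NextToken = Callable[[list[int]], int]  # greedy "model": context -> next token id


def speculative_step(context: list[int], draft: NextToken, target: NextToken, k: int) -> list[int]:
    """One round of greedy speculative decoding; returns the tokens accepted this round."""
    # 1) The cheap draft model proposes k tokens autoregressively.
    proposal, ctx = [], list(context)
    for _ in range(k):
        token = draft(ctx)
        proposal.append(token)
        ctx.append(token)

    # 2) The target model checks each drafted position (a loop here for clarity).
    accepted, ctx = [], list(context)
    for token in proposal:
        expected = target(ctx)
        if token != expected:
            accepted.append(expected)  # keep the target's correction and stop
            return accepted
        accepted.append(token)
        ctx.append(token)

    # 3) Every draft matched, so the target's next prediction is a free bonus token.
    accepted.append(target(ctx))
    return accepted


def target_model(ctx: list[int]) -> int:
    return (ctx[-1] + 1) % 10  # toy "large" model: counts upward


def draft_model(ctx: list[int]) -> int:
    # Toy draft model: agrees with the target on short contexts, then guesses wrong.
    return (ctx[-1] + 1) % 10 if len(ctx) < 5 else 0


print(speculative_step([0], draft_model, target_model, k=4))
print(speculative_step([0, 1, 2, 3, 4, 5], draft_model, target_model, k=4))
```

The first call accepts all four drafted tokens plus a bonus token ([1, 2, 3, 4, 5]), while the second rejects the first draft and keeps only the target’s correction ([6]). Either way, the output matches what greedy decoding with the target model alone would produce.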

Best Use Cases

TensorRT-LLM works best if your app requires high throughput and performance on NVIDIA GPUs. With its built-in features, the library can accelerate LLM inference in enterprise-grade situations, like real-time content generation, interactive chatbots, or live document summarization.

Pricing Models

Open-source under Apache 2.0.

3. Hugging Face Text Generation Inference (TGI)

Hugging Face Text Generation Inference (TGI) is a toolkit for deploying and serving LLMs. TGI works with various hardware platforms, including NVIDIA and AMD GPUs, AWS Inferentia, Intel GPUs and Gaudi accelerators, and Google TPUs.

As the name suggests, this toolkit mainly focuses on high-throughput, high-performance text generation with popular open-source LLMs for production-ready applications. It also serves optimized vision language models to handle multimodal tasks. Commonly supported models include DeepSeek V2/V3, Gemma, Llama, Mistral, Qwen, and GPT-NeoX.

Key Features

- Simple Deployment: TGI provides a simple launcher to serve common language models without the need for heavy configuration.

- API Compatibility: TGI comes with the Messages API, which uses the same interface as OpenAI’s Chat Completion API. So, you can switch from OpenAI to TGI with minimal code changes, often just by pointing your client at a different base URL.

- Increased Throughput: TGI has various advanced features to ensure high performance and scalability. In particular, Continuous Batching groups incoming requests dynamically to keep GPUs busy and increase overall throughput. Tensor Parallelism splits a single large model across multiple GPUs, so they can work concurrently and reduce inference time. Meanwhile, Token Streaming (SSE) delivers generated tokens to the client as soon as they’re ready, so users see responses in real time.

- Optimized Inference: TGI offers Flash Attention and Paged Attention to implement high-speed, memory-efficient attention that accelerates inference.

- Quantization Options: TGI provides different algorithms and formats (`bitsandbytes`, GPTQ, AWQ, FP8, EETQ, etc.) to balance memory efficiency and accuracy for different needs.

- Output Quality & Control: TGI controls the quality of results with Speculative Decoding, Guidance, Log Probabilities, and more. For example, Guidance constrains the output to a specific format, such as JSON that follows a given schema.
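Since the Messages API mirrors OpenAI’s Chat Completions format, switching usually means changing the endpoint rather than the payload. The stdlib-only sketch below builds such a request; the URL, port 8080, and the placeholder model name `tgi` are assumptions about a typical local deployment, `build_chat_request` is a hypothetical helper, and nothing is sent unless you uncomment the last lines with a TGI server running.

```python
import json
import urllib.request

# Assumed local TGI deployment; adjust the host and port to your own setup.
TGI_URL = "http://localhost:8080/v1/chat/completions"


def build_chat_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build an OpenAI-style Chat Completions request aimed at a TGI server."""
    payload = {
        "model": "tgi",  # placeholder: a TGI instance serves the model it was launched with
        "messages": [
            {"role": "system", "content": "You are a concise assistant."},
            {"role": "user", "content": prompt},
        ],
        "max_tokens": max_tokens,
        "stream": False,
    }
    return urllib.request.Request(
        TGI_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_chat_request("Summarize PagedAttention in one sentence.")
# With a server running, the reply has the same shape as an OpenAI response:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["choices"][0]["message"]["content"])
```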

Best Use Cases

Hugging Face Text Generation Inference (TGI) works best for various production-ready text generation applications. These use cases span from conversational AI and content creation to document translation and summarization.

Pricing Models

Open-source under Apache 2.0.

4. OpenLLM

OpenLLM is one of the best open-source vLLM alternatives.

It helps you deploy and run open-source LLMs on-premises or on cloud servers. It also lets you add your own model repository and serve custom models as OpenAI-compatible APIs with a single command.

Beyond serving models on its own, OpenLLM integrates with other powerful tools (e.g., LangChain or BentoML) to build more complex AI applications. It is built with advanced inference and serving techniques for high throughput and low latency, even under many concurrent requests.

Key Features

- Intuitive Chat UI: OpenLLM offers a built-in chat UI at the `/chat` endpoint, cutting-edge inference backends, and a simplified workflow for implementing enterprise-grade cloud deployment with Kubernetes, Docker, and BentoCloud.

- Ready-Made LLM Service Layer: OpenLLM comes with built-in functionality for production use, from REST and gRPC APIs, query management, and quantization options to authentication and deployment tooling.

- Self-Hosted Flexibility: OpenLLM allows you to deploy language models on your own system to get full control over data privacy and performance.

- Multi-GPU Serving: OpenLLM can serve large models on multiple GPUs. It also provides the `workers-per-resource` option that allows you to set the number of worker processes each GPU can run. If your machine has NVIDIA GPUs and you’ve already installed nvidia-docker, you can run the server with GPU acceleration.

- Quantization: OpenLLM supports multiple compression techniques (e.g., AWQ, LLM.int8(), SpQR, GPTQ, and SqueezeLLM) to reduce memory usage and accelerate inference.

Best Use Cases

OpenLLM is ideal if you want a full-featured application server to run and manage LLMs instead of a fast inference engine for content generation.

The tool already offers ready-to-use APIs and production-ready monitoring tools, so you don’t need to code your own API or set up logging and scaling yourself. It also hosts and monitors multiple models or backends in one place and supports version control and multi-user access.

With all these capabilities, OpenLLM gives you a one-stop solution for production use.

Pricing Models

Open-source under Apache 2.0.

5. DeepSpeed

Developed by Microsoft, DeepSpeed has gained traction as a deep learning optimization library that makes distributed training and inference easier and more efficient.

Instead of manually distributing complex model training across many GPUs, you can do it easily with DeepSpeed’s ready-made scripts and configuration tools. For example, the DeepSpeed team reported that DeepSpeed-Chat trains ChatGPT-style models with RLHF (Reinforcement Learning from Human Feedback) more than 15x faster than existing state-of-the-art RLHF systems.

DeepSpeed works on the following four innovation pillars:

- Training comes with techniques such as ZeRO (Zero Redundancy Optimizer), ZeRO-Infinity, and 3D parallelism to simplify and streamline large-scale model training.

- Inference provides parallelism innovations, high-performance custom inference kernels, novel memory techniques, and more to speed up inference at scale and reduce costs.

- Compression includes optimized techniques to shrink the size of models and increase the inference efficiency.

- DeepSpeed4Science offers unique capabilities to support researchers.

Based on these innovation pillars, the DeepSpeed team at Microsoft developed a software suite, known as the DeepSpeed library. This comprehensive library packages innovative technologies in DeepSpeed Training, Inference, and Compression into an open-source repository.

Key Features

- Model Parallelism: DeepSpeed offers a wide range of parallelism technologies to fit large models into GPU memory, including tensor, pipeline, expert, and ZeRO-powered data parallelism. In particular, ZeRO partitions the model’s training states (parameters, gradients, and optimizer states) across devices (GPUs, and CPUs via offloading) to reduce the memory each device needs.

- MII (Model Implementations for Inference): This open-source repository supports thousands of popular deep learning models and removes the need to apply complex system optimizations manually. It thereby makes high-throughput, low-latency inference accessible to data scientists.

- Customized Inference Kernels: DeepSpeed provides tailored inference kernels for Transformer blocks through operator fusion to gain high compute efficiency.

- Mixture of Quantization (MoQ): To further reduce the inference cost of large-scale models, DeepSpeed uses a quantization approach called MoQ. It helps preserve model accuracy while supporting flexible quantization policies and schedules in production.

- Multi-GPU Inference: DeepSpeed lets you run inference on multiple GPUs for compatible Transformer-based models without hassle. You only need to provide the model-parallelism degree and either the checkpoint information or a model already loaded from a checkpoint; DeepSpeed handles the rest.
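The ZeRO paper’s memory analysis makes the benefit of partitioning training states easy to estimate. The sketch below applies that analysis to mixed-precision Adam training (2 bytes per parameter for FP16 weights, 2 for FP16 gradients, 12 for FP32 optimizer states); `zero_memory_gb` is a hypothetical helper, not a DeepSpeed API, and it ignores activations and temporary buffers. In DeepSpeed itself, the stage is chosen through the `zero_optimization.stage` setting in the JSON config.

```python
def zero_memory_gb(num_params: float, num_gpus: int, stage: int, k: int = 12) -> float:
    """Per-GPU memory (GB) for model states under mixed-precision Adam, following ZeRO.

    2 bytes/param for FP16 weights, 2 for FP16 gradients, and k (=12) for FP32
    optimizer states (master weights, momentum, variance). Activations are ignored.
    """
    params, grads, optim = 2 * num_params, 2 * num_params, k * num_params
    if stage >= 1:
        optim /= num_gpus   # stage 1: partition optimizer states
    if stage >= 2:
        grads /= num_gpus   # stage 2: also partition gradients
    if stage >= 3:
        params /= num_gpus  # stage 3: also partition parameters
    return (params + grads + optim) / 1e9


for stage in range(4):
    print(f"stage {stage}: {zero_memory_gb(7.5e9, num_gpus=64, stage=stage):.1f} GB per GPU")
```

For a 7.5B-parameter model on 64 GPUs, this gives about 120 GB per GPU without ZeRO, 31.4 GB with stage 1, 16.6 GB with stage 2, and 1.9 GB with stage 3, matching the figures reported in the ZeRO paper.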

Best Use Cases

Choose DeepSpeed if you want to:

- Train or run inference on sparse or dense models with billions or even trillions of parameters, even on limited GPU resources.

- Gain very high system throughput and seamless scalability across thousands of GPUs.

- Compress models to achieve low inference latency at low cost.

Pricing Models

Open-source under Apache 2.0.

6. Ollama

Ollama is an open-source tool that lets you download, install, customize, and run open-source large language models (LLMs) like gpt-oss, Llama 3.2, LLaVA, or Mistral locally. It works like a library where you can set up and store LLMs for specific tasks, such as text generation, coding, or research support.

The 2025 Stack Overflow Developer Survey indicated that 15.4% of developers have used Ollama in the past year, while nearly 60% plan to work with this tool in the future.

Ollama runs LLMs locally in a self-contained setup, which helps avoid clashes with the rest of your system. Each model is packaged with everything it needs to perform a task: its pre-trained weights, customizable configurations (that define the LLM’s behavior), and other crucial dependencies.

Key Features

- Local Model Management: Ollama gives you full control over LLM management on local devices, hence removing dependence on cloud services and reducing associated risks (e.g., data leaks). You can download, update, tailor, and delete models easily on Ollama. Further, the framework allows you to switch and monitor different model versions to test their performance on particular tasks.

- Offline Functionality: Ollama lets you run LLMs offline, which is especially useful when you have limited connectivity or require strict data control.

- Command-Line and GUI Options: Ollama mainly offers a command-line interface (CLI) to run, customize, and manage language models. If you don’t have much command-line experience or prefer a visual workflow, you can pair Ollama with external GUI tools such as Open WebUI.

- Modelfile Customization: Ollama offers the Modelfile syntax to set particular prompts, parameters, and system messages for model customization.

- Multi-Platform Support: You can download and run Ollama across systems, including Linux, macOS, and Windows.

- Multi-Model Support: Ollama supports a wide range of models, including embedding, multimodal, and thinking (reasoning) models. Popular models available in Ollama include gpt-oss, EmbeddingGemma, Qwen3, and Llama 3.2.
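Besides the CLI, Ollama exposes a local REST API. The stdlib-only sketch below builds a request for its `/api/generate` endpoint; the `system` and `options` fields play the same role as SYSTEM and PARAMETER lines in a Modelfile. The model name and option values are assumptions for illustration, `build_generate_request` is a hypothetical helper, and the request is only sent if you uncomment the last lines with Ollama running.

```python
import json
import urllib.request

# Ollama listens on port 11434 by default; "llama3.2" assumes you've run `ollama pull llama3.2`.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "llama3.2") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {
        "model": model,
        "prompt": prompt,
        "system": "You are a helpful coding assistant.",  # like SYSTEM in a Modelfile
        "options": {"temperature": 0.2, "num_ctx": 4096},  # like PARAMETER lines in a Modelfile
        "stream": False,  # return one JSON object instead of a token stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_generate_request("Explain what a Modelfile is.")
# With Ollama running locally:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["response"])
```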

Best Use Cases

Ollama works best:

- For fast prototyping and local LLM experimentation.

- If your hardware is limited and your LLM project requires CPU setups or consumer-grade GPU servers to run models.

- When your team prioritizes data privacy and prefers working with completely isolated environments to run the models locally.

Pricing Models

Open-source under MIT.

7. MLC LLM

MLC LLM is another high-performance vLLM alternative built on machine learning compilation. It aims to let developers build, optimize, and run language models natively on their own platforms.

Key Features

- MLCEngine: At its core, MLC LLM uses MLCEngine, a unified high-performance engine for LLM serving and inference across platforms. It offers an OpenAI-compatible API, so you can interact with the engine just as you would with the OpenAI API, whether through a REST server, Python/JavaScript code, or iOS/Android apps.

- Machine Learning Compilation: MLC LLM uses ML compilation, including optimized attention kernels and a portable runtime for GPU/CPU inference. Through systems like Apache TVM, MLC LLM uses compiler acceleration to create backend-specialized optimizations for high-performance native LLM deployments.

- Quantization Support: By default, MLC LLM uses group quantization. This technique divides model weights into small groups and quantizes each group with its own scale factor, reducing memory usage while preserving accuracy.

- Flexible Hardware Integration: MLC-LLM allows you to deploy AI models across different hardware platforms, from computer OS (Linux, macOS, Windows) and mobile OS (iOS, Android) to web browsers.
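Group quantization is easy to sketch. The example below shows generic symmetric 4-bit group quantization in pure Python; it is not MLC LLM’s kernel or storage layout, `quantize_groups` and `dequantize_groups` are hypothetical helpers, and the group size of 4 is deliberately tiny for readability (real schemes commonly use groups of 32 or 128 weights, pack the codes into 4 bits, and store scales in FP16).

```python
def quantize_groups(weights: list[float], group_size: int = 4,
                    bits: int = 4) -> tuple[list[int], list[float]]:
    """Symmetric group-wise quantization: every group of weights gets its own scale."""
    qmax = 2 ** (bits - 1) - 1  # largest signed code, e.g. 7 for 4-bit
    codes: list[int] = []
    scales: list[float] = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        # Choose the scale so the group's largest magnitude maps to qmax.
        scale = max(abs(w) for w in group) / qmax
        if scale == 0.0:
            scale = 1.0  # all-zero group: any scale reproduces it exactly
        scales.append(scale)
        # Round each weight to the nearest integer code and clamp to the signed range.
        codes.extend(max(-qmax, min(qmax, round(w / scale))) for w in group)
    return codes, scales


def dequantize_groups(codes: list[int], scales: list[float], group_size: int = 4) -> list[float]:
    """Recover approximate weights by multiplying each code by its group's scale."""
    return [q * scales[i // group_size] for i, q in enumerate(codes)]


weights = [0.1, -0.2, 0.05, 0.3, 8.0, -6.0, 2.0, 1.0]  # second group contains outliers
codes, scales = quantize_groups(weights)
restored = dequantize_groups(codes, scales)
print(codes)
print([round(r, 3) for r in restored])
```

Because each group has its own scale, the large values in the second group do not affect the first. With a single scale for the whole tensor (8.0 / 7 ≈ 1.14), a small weight such as 0.1 would round to zero.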

Best Use Cases

MLC LLM is ideal:

- If you want to exploit the full potential of GPUs to accelerate complex LLM computations.

- When you want to natively download, customize, and run LLMs on your hardware platforms.

Pricing Models

Fully open-source under Apache 2.0.

8. llama.cpp

llama.cpp is an open-source library written in C/C++ for efficient LLM inference and serving on consumer-grade hardware. The library was created by Georgi Gerganov in early 2023 with the main goal of rewriting Meta’s LLaMA inference code entirely in C/C++ without external dependencies. By 2025, the project had drawn huge interest from the GitHub community, with 86.7K stars.

Key Features

- Wide Hardware Support: llama.cpp runs on various CPU/GPU platforms, such as x86, ARM, Apple Metal, CUDA, or CANN. These backends are provided by the underlying GGML tensor library, which llama.cpp uses to perform heavy computations. The library also leverages CPU instruction-set extensions (e.g., AVX, AVX2, and AVX-512 on x86-64 and NEON on ARM) for speed.

- Edge-Friendly Features: llama.cpp includes many features to run LLMs on edge devices efficiently. These features include ahead-of-time model quantization, on-the-fly KV-cache quantization, speculative decoding, and partial layer offloading.

- APIs for Front-End Integration: llama.cpp supports various APIs and features for front-end communication, including OpenAI-style endpoints and grammar-constrained output.

- GGUF File Format: GGUF is a binary file format that stores a model’s tensors and metadata in a single file. It lets llama.cpp save and load models quickly, and its extensible key-value metadata helps keep the format backward compatible when new model types appear.
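To show how simple the start of a GGUF file is, the sketch below reads its fixed-size header: the 4-byte magic `GGUF`, a little-endian `uint32` version, then `uint64` counts of tensors and metadata key-value pairs (the layout used by GGUF versions 2 and 3). `read_gguf_header` is a hypothetical helper that stops before the metadata itself, and the header values in the demo are made up; for full parsing, the `gguf` Python package maintained in the llama.cpp repository is a better fit.

```python
import io
import struct
from typing import BinaryIO


def read_gguf_header(f: BinaryIO) -> dict:
    """Read the fixed-size GGUF header (format versions 2 and 3, little-endian)."""
    magic = f.read(4)
    if magic != b"GGUF":
        raise ValueError(f"not a GGUF file (magic={magic!r})")
    # uint32 version, then uint64 tensor count and uint64 metadata key/value count.
    version, tensor_count, kv_count = struct.unpack("<IQQ", f.read(20))
    return {"version": version, "tensor_count": tensor_count, "metadata_kv_count": kv_count}


# Demo on an in-memory header with made-up counts; for a real model you would use
# open("model.gguf", "rb") instead (hypothetical path).
fake_file = io.BytesIO(b"GGUF" + struct.pack("<IQQ", 3, 291, 24))
print(read_gguf_header(fake_file))
```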

Best Use Cases

llama.cpp works best when your team prioritizes performance, control, and local deployments on consumer-grade hardware.

Pricing Models

Open-source under MIT.

9. LMDeploy

LMDeploy is another excellent alternative for vLLM. Developed by the MMRazor and MMDeploy teams, this toolkit has the main goal of quantizing, deploying, and serving LLMs and VLMs (e.g., Llama, InternLM, LLaVA, or Qwen-VL).

Key Features

- Efficient Inference: LMDeploy introduces innovative features, like continuous batching, blocked KV cache, tensor parallelism, dynamic split/fuse, high-performance CUDA kernels, etc. According to its developers, these features allow LMDeploy to deliver up to 1.8x higher request throughput than vLLM. LMDeploy also directly supports offline inference through its Offline Inference Pipeline.

- Quantization Support: LMDeploy supports different quantization techniques, including AWQ, GPTQ, SmoothQuant, and INT4/INT8 KV cache. According to the creators, 4-bit inference (turbomind-w4a16) is 2.4x faster than FP16.

- Distribution Server: LMDeploy allows you to distribute requests across multiple machines and servers. This keeps multi-model deployments easy and efficient.

- TurboMind & PyTorch as Backends: LMDeploy offers two inference engines, TurboMind and PyTorch. TurboMind focuses on maximizing inference performance, while the PyTorch engine is written purely in Python, which makes LMDeploy accessible to developers of all levels. The two engines support different inference data types and models.

Best Use Cases

LMDeploy is an excellent choice for:

- High-performance inference at scale

- Online and offline deployments for quick local experimentation or production-ready microservices

- Large models running on limited GPU resources.

Pricing Models

Open-source under Apache 2.0.

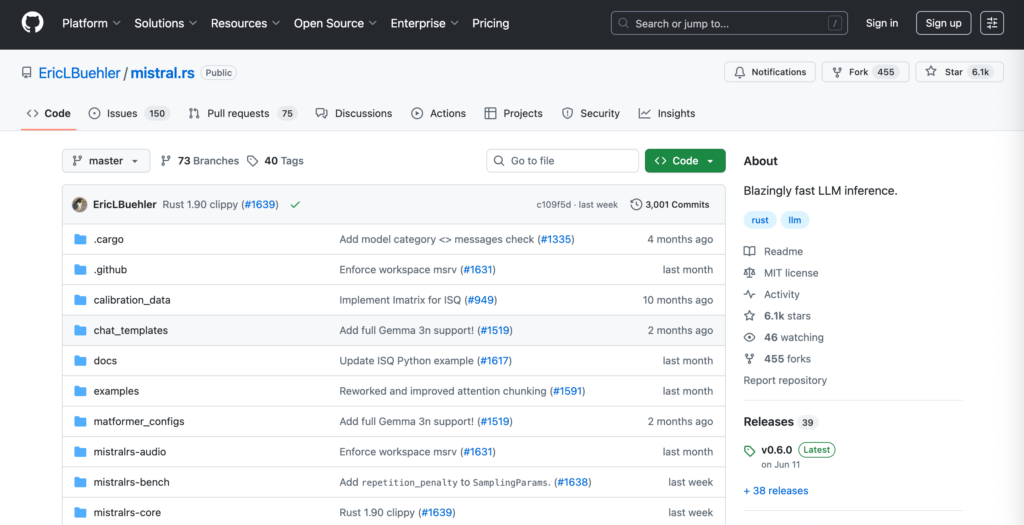

10. Mistral.rs

Mistral.rs is an extremely fast LLM inference engine that supports multimodal workflows across platforms. It offers diverse deployment options through Python APIs, Rust APIs, an OpenAI-compatible HTTP server, and an interactive mode. Better still, Mistral.rs now lets you use MCP (Model Context Protocol) to connect your local models with external tools and services automatically.

Key Features

- High Performance: Mistral.rs supports CPU/GPU acceleration and automatic tensor parallelism strategies that divide models across multiple devices to boost performance. It supports two distributed inference backends: NCCL for CUDA and a Ring backend for all devices. Mistral.rs also uses automatic device mapping to load as much of the model as possible into GPU memory and place the rest on the CPU.

- Effective Quantization: Mistral.rs includes various quantization techniques, including KV cache quantization, GPTQ, AWQ, GGML, ISQ (in situ quantization), and more. The tool can automatically choose the fastest quantization method or let you customize the strategy to balance speed, accuracy, and memory usage for your hardware.

- Flexibility: Mistral.rs comes with AnyMoE, LoRA and X-LoRA adapter support, automatic prompt chunking for large inputs, and integrated tool/API calling. These functionalities make Mistral.rs adaptable to various real-world applications.

- PagedAttention & FlashAttention V2/V3 for High Throughput: These optimized algorithms make attention’s memory reads and writes more efficient, increasing inference speed and making better use of GPU memory.

- Prefix Caching: This feature keeps the already-processed data (including multimodal), so your model doesn’t need to re-calculate it each time. This helps lower latency and memory usage.
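Automatic device mapping, mentioned under High Performance above, boils down to deciding which layers fit in GPU memory. The sketch below is a hypothetical greedy version (`map_layers_to_devices` is not a Mistral.rs API): it fills the GPU with layers in order, keeps some headroom for the KV cache and activations, and places the remaining layers on the CPU. The layer sizes and memory budget are made-up numbers; real mappers also consider multiple GPUs, sequence length, and data types.

```python
def map_layers_to_devices(layer_sizes_gb: list[float], gpu_memory_gb: float,
                          reserve_gb: float = 1.0) -> list[str]:
    """Greedy device map: fill the GPU with layers in order, then spill the rest to CPU."""
    budget = gpu_memory_gb - reserve_gb  # headroom for the KV cache and activations
    placement: list[str] = []
    used = 0.0
    spilled = False
    for size in layer_sizes_gb:
        if not spilled and used + size <= budget:
            placement.append("gpu")
            used += size
        else:
            spilled = True  # keep the CPU part contiguous to limit device hand-offs
            placement.append("cpu")
    return placement


layers = [0.4] * 32  # hypothetical model: 32 layers of 0.4 GB each
placement = map_layers_to_devices(layers, gpu_memory_gb=8.0)
print(placement.count("gpu"), "layers on GPU,", placement.count("cpu"), "on CPU")
```

Here 17 of the 32 layers fit on the GPU and the remaining 15 run on the CPU. Keeping the CPU part as one contiguous block means activations cross between devices at a single boundary per forward pass.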

Best Use Cases

Mistral.rs is a good option for applications requiring:

- Native Rust integration

- Multimodal capabilities

- High-performance local inference

- Tool use and agentic workflows

- Cross-platform compatibility

Pricing Models

Open-source under MIT.

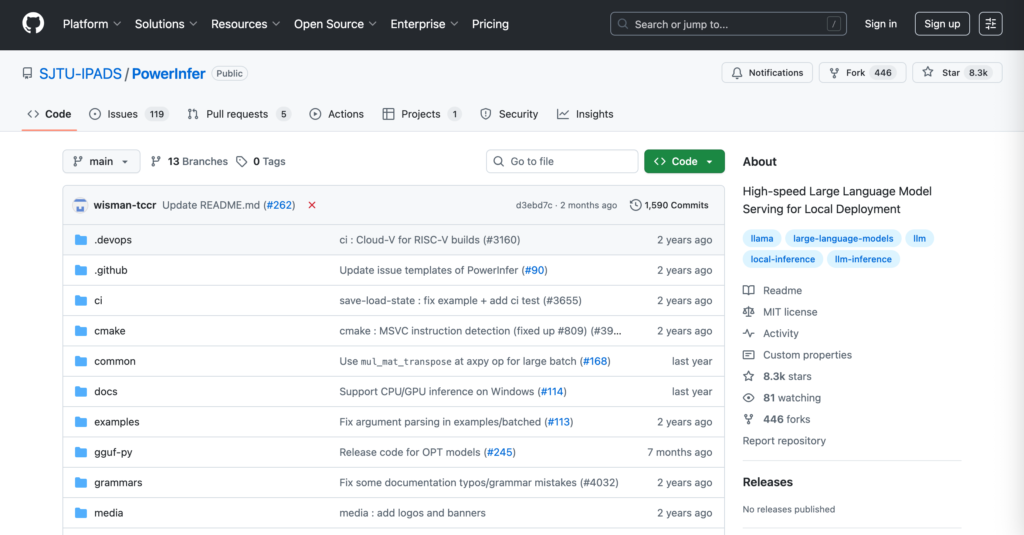

11. PowerInfer

PowerInfer is a fast engine for LLM inference and serving on consumer-grade GPU devices. It aims to give you near-data-center performance without investments in expensive server hardware.

To achieve that goal, PowerInfer preloads frequently activated (“hot”) neurons onto the GPU, while keeping rarely activated (“cold”) neurons on the CPU. This hybrid inference engine processes the always-needed hot neurons quickly on the GPU and computes the cold ones on the CPU only when they are needed. As a result, PowerInfer cuts down CPU-GPU data transfers and GPU memory usage.

Key Features

- Locality-Centric Design: PowerInfer uses the hot/cold neuron concept to increase LLM inference speed and cut down resource usage. Further, it adopts neuron-aware sparse operators to skip computations for neurons unlikely to activate, while employing adaptive predictors to predict which neurons will become “hot” next. These features ensure efficient LLM inference.

- Hybrid CPU/GPU Use: PowerInfer leverages the calculation capabilities of CPU and GPU to boost processing speed and ensure balanced workloads.

- Local Deployment: PowerInfer is built for local deployment on ordinary computers equipped with modest GPUs. This design allows for low-latency LLM inference and serving on a single GPU.

- Easy Integration: PowerInfer integrates seamlessly with more than 100 ReLU-sparse models for text generation.
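The hot/cold split and the sparse computation it enables can be sketched as follows. This is a simplified illustration, not PowerInfer’s code: `split_hot_cold` and `relu_layer` are hypothetical helpers, activation counts stand in for offline profiling, and the set of active neurons is given directly rather than produced by PowerInfer’s adaptive predictors.

```python
def split_hot_cold(activation_counts: list[int], gpu_slots: int) -> tuple[set[int], set[int]]:
    """Put the most frequently activated neurons on the GPU and the rest on the CPU."""
    ranked = sorted(range(len(activation_counts)), key=lambda n: activation_counts[n], reverse=True)
    return set(ranked[:gpu_slots]), set(ranked[gpu_slots:])


def relu_layer(x: list[float], weights: list[list[float]], neurons: set[int]) -> list[float]:
    """Evaluate only the listed neurons; every other output is left at 0.0."""
    out = [0.0] * len(weights)
    for n in neurons:
        out[n] = max(0.0, sum(w * xi for w, xi in zip(weights[n], x)))
    return out


activation_counts = [90, 3, 75, 1, 60, 2]  # hypothetical profiling statistics
hot, cold = split_hot_cold(activation_counts, gpu_slots=3)

x = [1.0, 2.0]
weights = [[1.0, 1.0], [1.0, 0.0], [0.5, 0.0], [-2.0, 0.0], [0.0, 1.0], [0.0, -3.0]]
predicted_active = {0, 1, 2, 4}            # stand-in for a predictor's output
gpu_work = predicted_active & hot          # hot and active: computed on the GPU
cpu_work = predicted_active & cold         # cold but active: computed on the CPU

sparse = relu_layer(x, weights, gpu_work | cpu_work)
dense = relu_layer(x, weights, set(range(len(weights))))
print(sparse == dense)
```

Because the skipped neurons would output zero after ReLU anyway, the sparse result matches the dense one (this prints `True`) while only four of six neurons are evaluated. The real savings depend on how accurate the predictor is.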

Best Use Cases

PowerInfer works best for local LLM deployments on consumer-grade GPUs.

Pricing Models

Open-source under MIT.

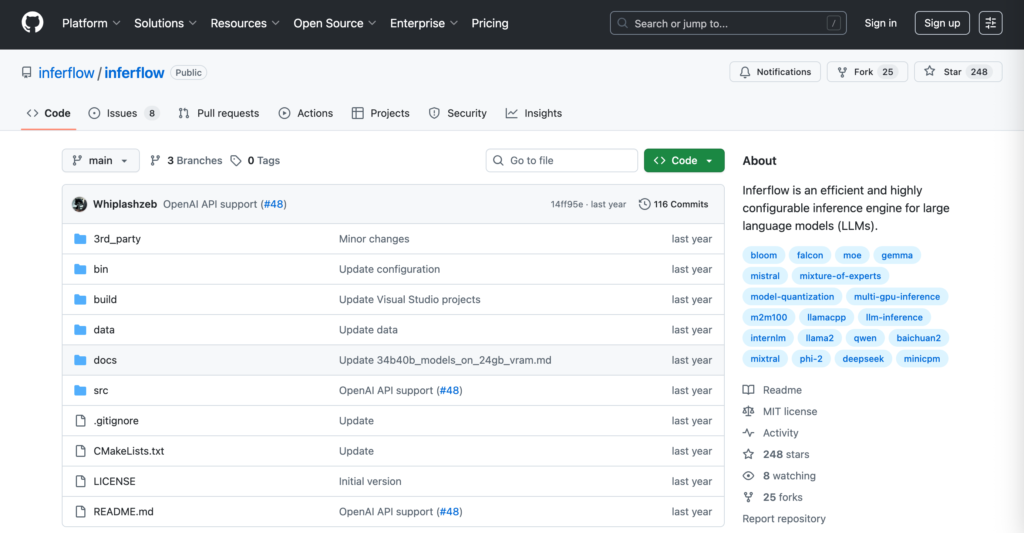

12. Inferflow

The last vLLM alternative we want to mention today is Inferflow, a highly configurable engine for LLM inference and serving. To serve a new transformer model, you typically only need to modify a few lines in the corresponding configuration files, with no need to write a single line of source code.

Key Features

- Modular Framework: Inferflow uses independent atomic building blocks for tasks like model loading, memory management, and inference scheduling. With this modular design, you can easily plug in new components to support new model architectures without rewriting the whole system.

- Quantization: Inferflow supports 2-bit, 3-bit, 3.5-bit, 4-bit, 5-bit, 6-bit, and 8-bit quantization. Among them, 3.5-bit quantization is a newly introduced scheme that sits between 3-bit and 4-bit quantization to address their limitations, aiming for good precision with higher speed and lower memory usage.

- Hybrid Model Partitioning for Multi-GPU Inference: Inferflow’s hybrid method combines layer and tensor partitioning. It dynamically decides which parts to split by layer and which by tensor to balance latency and throughput.

- GPU/CPU Hybrid Inference: Inferflow supports CPU-only, GPU-only, and GPU/CPU hybrid inference.

- Diverse File Formats: Inferflow can load models stored in multiple file formats (e.g., pickle, safetensors, llama.cpp GGUF, etc.). The engine also uses a simplified pickle parser written in C++ to load pickle data safely.

- Multiple Network Types: Inferflow supports three types of transformer models: decoder-only, encoder-only, and encoder-decoder.

Best Use Cases

Inferflow works well if you want to:

- Deploy and run very large models across multiple GPUs

- Cut GPU memory without sacrificing much accuracy.

- Experiment with new LLM architectures or new inference optimizations.

Pricing Models

Open-source under MIT.


Decision Framework: How to Choose the Right vLLM Alternative for Your Project

Swapping vLLM for something else isn’t just about evaluating speed benchmarks. There’s a lot to untangle. The right inference framework must match your goals, infrastructure requirements, and budget. So, how can you choose the right vLLM alternative for your project? Here are our tips:

1. Clarify your core requirements

First off, write down what specific use cases you want an inference tool to deal with. Is it about real-time chat, multimodal tasks, or batch generation?

Then, consider model type and size. Are you serving 7B or 70B+ models? Some LLM inference engines excel at handling small to mid-size models, while others specialize in very large ones.

Finally, identify your preferred deployment environment. Is that on-premises, cloud, or edge devices?

These clear factors help you define your core requirements easily.

2. Evaluate performance at scale

You want speed, sure. But the throughput and latency capabilities of vLLM alternatives must align with the context of your work.

So, when evaluating an engine’s performance at scale, consider:

- How fast and consistently the engine generates tokens, even under heavy load.

- Whether the engine offers features like PagedAttention or tensor parallelism to optimize memory efficiency, especially when handling large models.

- How easily the engine scales out to more GPUs or servers when traffic increases.

3. Check ecosystem and compatibility

A rich ecosystem can reduce development and deployment time. So, consider:

- Whether the engine offers API compatibility (e.g., an OpenAI-compatible endpoint).

- Which frameworks or tools the engine natively supports (e.g., PyTorch, Hugging Face Transformers, or LangChain).

- Whether the engine has an active, strong community.

4. Don’t ignore operational factors

Review how the framework supports operations through the following features:

- Which deployment tooling does the framework offer to reduce setup complexity?

- Does the framework come with built-in monitoring tools and performance metrics to evaluate its performance over time?

- Which setups (CPU-only, multi-GPU, etc.) does the framework support for cost control and memory efficiency?

5. Test on pilot projects

An inference engine that looks good on paper may still disappoint on your workload. So, test it on a small pilot before committing.

Accordingly, you should:

- Run a representative model and workload.

- Measure latency, throughput, developer effort, and other crucial factors.

- Get feedback from both engineers and end-users.
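To keep pilot measurements comparable across engines, it helps to record them the same way each time. The stdlib-only sketch below times requests and reports p50/p95/p99 latency plus token throughput; `time_requests`, `summarize_run`, and `fake_engine` are hypothetical helpers, `fake_engine` stands in for a real client call, requests are sent one at a time (a load test would send them concurrently), and the token count is something you would take from each engine’s responses.

```python
import statistics
import time
from typing import Callable


def time_requests(send_request: Callable[[str], None], prompts: list[str]) -> list[float]:
    """Send prompts one at a time and record each request's end-to-end latency in seconds."""
    latencies = []
    for prompt in prompts:
        start = time.perf_counter()
        send_request(prompt)
        latencies.append(time.perf_counter() - start)
    return latencies


def summarize_run(latencies_s: list[float], tokens_generated: int, wall_time_s: float) -> dict:
    """Latency percentiles plus overall token throughput for one pilot run."""
    cuts = statistics.quantiles(latencies_s, n=100, method="inclusive")  # 1st..99th percentiles
    return {
        "p50_s": statistics.median(latencies_s),
        "p95_s": cuts[94],
        "p99_s": cuts[98],
        "tokens_per_s": tokens_generated / wall_time_s,
    }


def fake_engine(prompt: str) -> None:
    time.sleep(0.001)  # hypothetical stand-in for a real request to the engine under test


latencies = time_requests(fake_engine, ["hello"] * 20)
print(summarize_run(latencies, tokens_generated=20 * 64, wall_time_s=sum(latencies)))
```

For streaming APIs, it is also worth recording time-to-first-token separately from end-to-end latency, since that is what users perceive as responsiveness.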

Conclusion

vLLM is one of the most common LLM inference and serving engines. It works best for very large language models on high-end GPUs. Although this engine is powerful, it doesn’t suit every use case. That’s why you should consider other vLLM alternatives depending on your specific applications.

Through this article, we hope you have a comprehensive overview of the best vLLM alternatives in today’s era. Choose the right one based on your core requirements about infrastructure, budget, and use cases.

Are you also looking for a reliable, experienced partner to develop LLM-based applications using such inference frameworks? Contact Designveloper!

We are the leading software and AI development company in Vietnam, with 12 years of hands-on experience in building simple chatbots to complex agentic systems across industries. Our 100+ skilled professionals have strong technical and UX capabilities to create custom, scalable AI solutions that fit your specific requirements.

We have mastery of LangChain, OpenAI, and other cutting-edge technologies to develop customer service chatbots that automate support ticket triage. We also built medical and financial analysis assistants that prioritize data security while offering contextually relevant answers to users.

With our proven track record, excellent communication, and Agile approaches, we commit to delivering the best outcomes on time and within your budget. Want to revolutionize your existing infrastructure with the latest AI technologies? Designveloper is eager to help!
